Docker containers have revolutionized the way DevOps processes are executed. They provide a lightweight and efficient way to package, set up, and manage applications. In this article, we explore the advantages of using Docker containers in DevOps, walk through setting up and managing containerized applications, and cover best practices for container deployment.

Understanding Containerization in DevOps

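Containerization is a form of operating-system-level virtualization: each application runs in its own isolated environment, but all containers on a host share that host's kernel instead of booting a separate operating system. On Linux, this isolation is provided by kernel features such as namespaces and cgroups. Containerization provides a standardized way to package and distribute software, making it easier to deploy and manage applications across different environments.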

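In DevOps, containerization has become an essential tool for streamlining the software development lifecycle. With containerization, developers can build applications in a consistent environment that is largely independent of how the host machine is set up. (Containers still depend on the host kernel and CPU architecture: Linux containers need a Linux kernel, which Docker Desktop supplies through a lightweight virtual machine on macOS and Windows.) This allows for faster testing, debugging, and deployment.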

Docker containers are the most widely used containerization technology in DevOps processes. They are lightweight and portable, and they package applications together with their dependencies, which makes them efficient to run and easy to scale.

Benefits of Containerization in DevOps

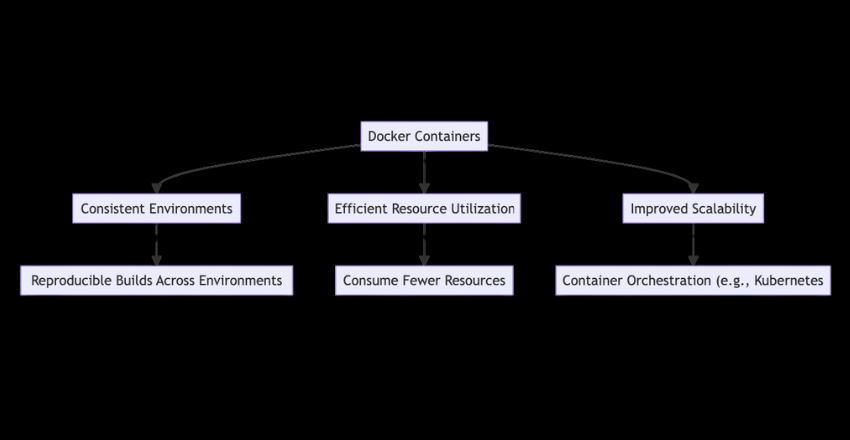

Containerization offers several benefits to DevOps processes, including:

- Consistent Environments: Containerization allows applications to be built and run in a consistent environment, reducing the risk of compatibility issues and streamlining the development process.

- Efficient Resource Utilization: Containers share the host's kernel rather than running a full guest operating system, so they require less overhead than virtual machines, enabling more efficient use of resources.

- Improved Scalability: Containerization allows for more efficient scaling of applications, as individual containers can be easily replicated and distributed across different environments.

Overall, containerization has become a critical component of DevOps processes, enabling faster, more efficient, and more scalable application development and deployment.

Streamlining DevOps Processes with Docker Containers

Docker containers have become an essential component in DevOps processes, providing developers with a reliable and efficient way to manage application deployments. Containers enable consistent environments, streamline resource utilization, and improve scalability, resulting in faster development cycles and enhanced collaboration among teams.

One of the key benefits of using Docker containers in DevOps processes is the ability to create reproducible builds that remain consistent across environments. This means that developers can set up and manage applications with far fewer compatibility issues and software conflicts. Moreover, containers are lightweight and consume fewer resources than traditional virtual machines, allowing teams to optimize infrastructure utilization and reduce costs.

Consistent Environments

Docker containers provide a consistent environment for application development and deployment, allowing developers to avoid the “works on my machine” problem. Containers encapsulate dependencies and configurations, ensuring that applications can run seamlessly on different machines and environments.

With Docker, developers can use the same container configuration in development, testing, and production environments, ensuring that their code runs as expected regardless of the target environment. This helps to minimize the risk of software defects and configuration errors, resulting in higher quality releases and improved customer satisfaction.

Efficient Resource Utilization

Docker containers enable efficient resource utilization, allowing multiple applications to run on a single host machine without interfering with each other. Each container gets its own isolated view of processes, networking, and the filesystem, and when resource limits are set, one container is kept from starving the others or the host of CPU and memory.

Containers use less storage and memory than traditional virtual machines, making them a more lightweight and efficient solution for application deployment. This, in turn, reduces infrastructure costs and improves the overall performance of the system.

Improved Scalability

Docker containers improve scalability for DevOps processes, allowing teams to scale applications up or down quickly as demand changes. Because a container typically starts in seconds and carries its own dependencies, additional instances can be launched across multiple hosts or cloud providers, usually with an orchestrator coordinating them, to distribute the workload and improve the overall responsiveness of the system.

Container orchestration tools such as Kubernetes make it easy to manage containerized applications at scale, providing a single interface for deploying, scaling, and managing containers across multiple hosts or clusters. This, in turn, enables faster and more efficient DevOps processes and improves the resilience of the system.

Code Example:

To illustrate how Docker containers can streamline DevOps processes, let’s create a simple example that encapsulates the deployment of a web application using Docker. This example demonstrates how to containerize a Python Flask application, ensuring a consistent environment, efficient resource utilization, and improved scalability.

Step 1: Create a Flask Application

First, we’ll create a simple Flask application. Save this code as app.py.

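```python
from flask import Flask

app = Flask(__name__)


@app.route('/')
def hello_world():
    return 'Hello, Docker!'


if __name__ == '__main__':
    # Listen on all interfaces so the app is reachable from outside the container.
    # Debug mode stays off: Flask's interactive debugger must never be exposed on a network.
    app.run(host='0.0.0.0', port=5000)
```

Step 2: Define the Dockerfile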

Next, we define a Dockerfile to create a Docker image for the Flask application. The Dockerfile specifies the base image, dependencies, and commands to run the application. Save this as Dockerfile.

```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.8-slim

# Set the working directory in the container
WORKDIR /app

# Copy the current directory contents into the container at /app
COPY . /app

# Install the application's only dependency, Flask
RUN pip install --no-cache-dir flask

# Document that the app listens on port 5000 (publishing still requires -p at run time)
EXPOSE 5000

# Run app.py when the container launches
CMD ["python", "app.py"]
```

Step 3: Build and Run the Docker Container

Now, build the Docker image and run it as a container. These commands are executed from the terminal.

```bash
# Build the Docker image
docker build -t flask-sample-app .

# Run the Flask app in a Docker container, mapping host port 5000 to container port 5000
docker run -p 5000:5000 flask-sample-app
```

Explanation:

- The Dockerfile starts from a lightweight Python image (python:3.8-slim), so every build uses the same interpreter and system libraries, keeping the environment consistent.
- The application code is copied into the image and its dependency (Flask) is installed at build time, so the image encapsulates the application's environment completely.
- The app listens on port 5000, and the -p 5000:5000 option publishes that port on the host, making the Flask application reachable from outside the container. Because the image contains only the slim base image and Flask, the container needs few resources.
- The same image can be run as multiple replicas with Docker Compose or an orchestration tool like Kubernetes, which is how this setup scales.

This example showcases how Docker containers facilitate DevOps processes by ensuring consistent environments across development, testing, and production, optimizing resource utilization, and enhancing scalability. By containerizing applications, developers can avoid compatibility issues, streamline deployments, and focus on delivering high-quality software efficiently.

Overall, Docker containers have become an essential tool for streamlining DevOps processes, providing a reliable and scalable way to manage application deployments. With the right tools and strategies, developers can leverage the power of containers to optimize infrastructure utilization, reduce costs, and accelerate software delivery.

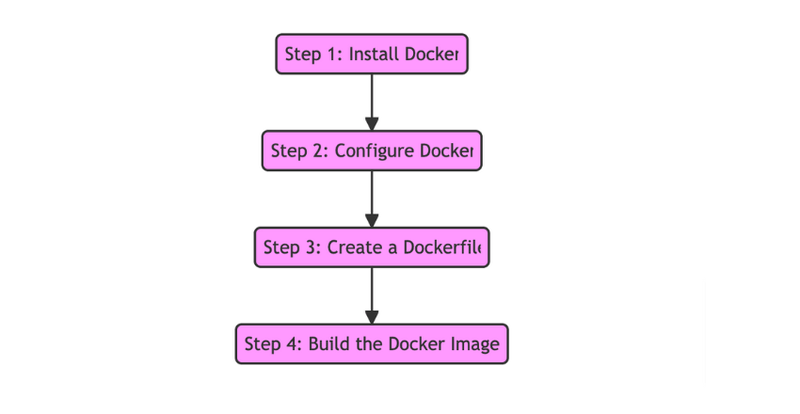

Setting Up Docker Containers

Docker containers provide a powerful and efficient way for DevOps teams to manage applications in development and production environments. Setting up Docker containers is a straightforward process that can be completed in just a few steps.

Step 1: Install Docker

The first step to setting up Docker containers is to install Docker on your system. Docker runs on Windows, Linux, and macOS. On Windows and macOS, you typically install Docker Desktop from the official Docker website; on Linux, Docker Engine is usually installed from Docker's package repository, as in the code example later in this section.

Step 2: Configure Docker

Once Docker is installed, you’ll need to configure it to suit your requirements. The default configuration is usually sufficient for most use cases, but you can customize it depending on your needs.

You can configure Docker by modifying the daemon.json file, which is located in the /etc/docker directory on Linux systems and in the C:\ProgramData\Docker\config directory on Windows systems. The file contains various settings that control the behavior of Docker.
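If you manage daemon.json from automation, it is safer to merge new settings into the existing file than to overwrite it. The Python sketch below assumes the Linux path /etc/docker/daemon.json and performs a shallow, top-level merge; the example keys (storage-driver, log-driver, log-opts) are documented daemon options, but the values are illustrative only.

```python
import json
from pathlib import Path

# Assumption: Linux location; on Windows the file is C:\ProgramData\docker\config\daemon.json.
DAEMON_JSON = Path("/etc/docker/daemon.json")


def merge_daemon_config(path: Path, updates: dict) -> dict:
    """Merge `updates` into the JSON object stored at `path` and write the result back.

    A missing or empty file is treated as {}. The merge is shallow: a top-level key in
    `updates` replaces the existing value, including nested objects such as "log-opts".
    """
    current = {}
    if path.exists():
        text = path.read_text(encoding="utf-8").strip()
        if text:
            current = json.loads(text)  # raises json.JSONDecodeError on malformed input
    merged = {**current, **updates}
    path.write_text(json.dumps(merged, indent=2) + "\n", encoding="utf-8")
    return merged


# Illustrative settings: pin the storage driver and cap the size of json-file logs.
EXAMPLE_SETTINGS = {
    "storage-driver": "overlay2",
    "log-driver": "json-file",
    "log-opts": {"max-size": "10m", "max-file": "3"},
}
```

Calling merge_daemon_config(DAEMON_JSON, EXAMPLE_SETTINGS) as root, then restarting the daemon (for example with sudo systemctl restart docker), would apply the settings.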

Step 3: Create a Dockerfile

A Dockerfile is a text file that contains instructions for Docker to build an image. The image is a template that can be used to create multiple containers with the same configuration. The Dockerfile specifies the base image, the commands to run, and the files to include in the image.

You can create a Dockerfile in any text editor and save it in the directory where your application code is located. By default, “docker build” looks for a file named “Dockerfile” with no file extension; the -f flag lets you point it at a file with a different name.

Step 4: Build the Docker Image

Once you have created the Dockerfile, you can use it to build a Docker image. The image is built by running the “docker build” command in the directory where the Dockerfile is located.

The “docker build” command reads the Dockerfile and executes its instructions to build the image. The final image is stored in your system's local image store (you can list it with “docker images”), ready to be used to create containers or to be pushed to a registry.

Code Example

The process of setting up Docker involves several key steps, from installation to building your first Docker image. Below is a code example that outlines these steps, focusing on Ubuntu Linux for simplicity (the commands use Docker's Ubuntu package repository). This example guides you through installing Docker, configuring it, creating a Dockerfile, and building a Docker image.

Step 1: Install Docker on Linux

First, update your existing list of packages:

```bash
sudo apt-get update
```

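Next, install a few prerequisite packages that let apt fetch Docker's signing key and packages over HTTPS:

```bash
sudo apt-get install -y ca-certificates curl
```

Then, add the GPG key for the official Docker repository to a dedicated keyring (the older apt-key approach is deprecated on current Ubuntu releases):

```bash
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc
```

Add the Docker repository to APT sources, signed by that key and matching your machine's architecture:

```bash
echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
```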

Update your package database with the Docker packages from the newly added repo:

```bash
sudo apt-get update
```

Finally, install Docker:

```bash
sudo apt-get install docker-ce
```

Step 2: Configure Docker

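To configure Docker, you might want to adjust settings such as the default network configuration or the storage location for Docker images and containers. For this example, we’ll explicitly set the storage driver to overlay2, the recommended driver and already the default on current Linux installations. Be careful when changing the storage driver on a host that already has images and containers: they become inaccessible under the new driver.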

Edit the daemon.json file. If it doesn’t exist, create it:

```bash
sudo nano /etc/docker/daemon.json
```

Add the following configuration:

```json
{
  "storage-driver": "overlay2"
}
```

Restart Docker to apply the changes:

```bash
sudo systemctl restart docker
```

Step 3: Create a Dockerfile

Navigate to your project directory, or create a new one:

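```bash
mkdir mydockerproject && cd mydockerproject
```

Place your app.py (for example, the Flask application shown earlier) in this directory, together with a requirements.txt file that lists flask. Then create a Dockerfile with the following content, which sets up a simple web server using Python Flask: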

```dockerfile
# Use an official Python runtime as a base image
FROM python:3.8

# Set the working directory in the container
WORKDIR /usr/src/app

# Copy and install dependencies first so this layer stays cached between code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the rest of the application code
COPY . .

# Document the port Flask listens on (5000 by default); publish it with -p at run time
EXPOSE 5000

# Run app.py when the container launches
CMD ["python", "app.py"]
```

Step 4: Build the Docker Image

With your Dockerfile in place, build your Docker image:

```bash
docker build -t my-flask-app .
```

This command tells Docker to build an image from the Dockerfile in the current directory and tag it my-flask-app.

By following these steps, you have successfully set up Docker on your system, configured it, created a Dockerfile for a simple application, and built a Docker image ready for deployment. This streamlined process exemplifies the power and simplicity of using Docker for developing, deploying, and running applications.

Setting up Docker containers is a simple process that can be accomplished in just a few steps. By following these steps, you can create a containerized environment that is consistent, efficient, and scalable.

Managing Docker Containers

Once you have set up your Docker containers, it is important to effectively manage them to ensure their proper functioning and optimal performance. Here are some tools and techniques for managing Docker containers:

Container Orchestration

Container orchestration platforms such as Kubernetes and Docker Swarm enable you to manage and automate the deployment, scaling, and monitoring of containerized applications. These platforms allow you to define and manage containerized workloads, services, and networks, and provide visibility and control over your entire container environment.

Monitoring

Proper monitoring of Docker containers is crucial for identifying and addressing issues before they impact your applications. Prometheus collects time-series metrics (often from an exporter such as cAdvisor for per-container data), Grafana turns them into dashboards, and hosted services such as Datadog combine metrics, logs, and events; together, tools like these provide actionable insights into your container environment.
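For a quick check without a full monitoring stack, Docker itself can report per-container usage: docker stats --no-stream --format "{{json .}}" prints one JSON object per running container. The Python sketch below assumes those lines carry the Name, CPUPerc, and MemPerc fields and flags containers above illustrative 80% thresholds. Note that CPUPerc can exceed 100% on multi-core hosts.

```python
import json


def parse_percent(value: str) -> float:
    """Convert a docker stats percentage such as '12.34%' to a float (non-numeric placeholders become 0.0)."""
    text = value.strip().rstrip("%")
    try:
        return float(text)
    except ValueError:
        return 0.0


def busy_containers(stats_lines, cpu_threshold=80.0, mem_threshold=80.0):
    """Return names of containers whose CPU or memory usage exceeds a threshold.

    Each non-empty line is assumed to be one JSON object as printed by
    `docker stats --no-stream --format "{{json .}}"`.
    """
    flagged = []
    for line in stats_lines:
        line = line.strip()
        if not line:
            continue
        row = json.loads(line)
        cpu = parse_percent(row["CPUPerc"])
        mem = parse_percent(row["MemPerc"])
        if cpu > cpu_threshold or mem > mem_threshold:
            flagged.append(row["Name"])
    return flagged
```

In practice you would feed it the output of subprocess.run(["docker", "stats", "--no-stream", "--format", "{{json .}}"], capture_output=True, text=True, check=True).stdout.splitlines().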

Scaling Strategies

Efficient scaling of Docker containers is essential for ensuring the availability and performance of your applications. Horizontal scaling, which involves adding more container instances to distribute the workload, and vertical scaling, which involves increasing the resources allocated to each container, are common scaling strategies. Auto-scaling tools adjust capacity automatically based on demand: the Kubernetes Horizontal Pod Autoscaler (available on Google Kubernetes Engine and other Kubernetes platforms) changes the number of container replicas, while services such as Amazon EC2 Auto Scaling add or remove the underlying host machines.
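The core rule of the Kubernetes Horizontal Pod Autoscaler, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance (0.1 by default) of 1.0. The Python sketch below reproduces only that calculation; the min/max bounds are illustrative parameters, and the real controller adds behaviors such as stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count following the HPA formula, clamped to [min_replicas, max_replicas].

    The default bounds are illustrative; in Kubernetes they come from the HPA spec.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: skip scaling
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 50% target scale to ceil(3 × 1.8) = 6 replicas.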

Effective management of Docker containers is crucial for delivering high-quality applications and services. With the right tools and techniques, you can maintain optimal performance and scalability of your container environment.

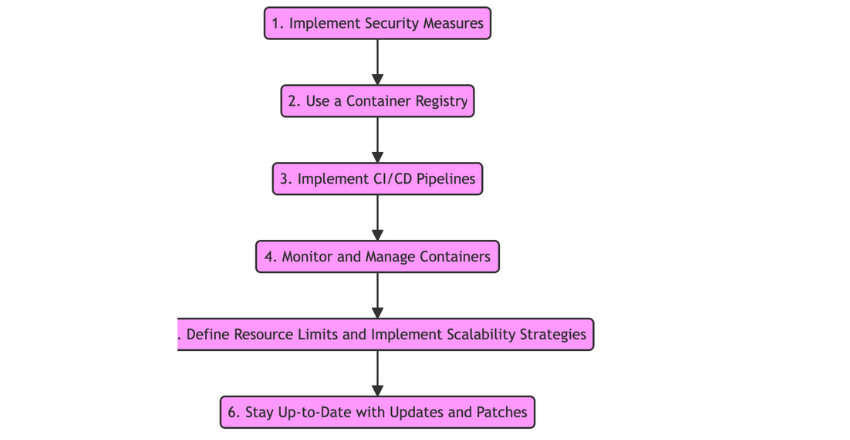

Best Practices for Docker Deployment

Deploying Docker containers in production environments requires careful planning and attention to detail. Below, we outline best practices for successful Docker deployment.

1. Implement Security Measures

Security should be a top priority when deploying Docker containers. It is critical to ensure that containers run only with necessary permissions and resources and use secure network configurations. Follow security best practices such as using authentication, encryption, and limiting access to sensitive data.
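Limiting permissions translates into concrete docker run options. The Python sketch below assembles one possible least-privilege command line (for example, to pass to subprocess.run). The 1000:1000 UID:GID default is an assumption about your image, and an application that must write outside /tmp would need the read-only root filesystem relaxed or a volume added.

```python
def hardened_run_args(image: str, name: str, user: str = "1000:1000", publish: str = "") -> list[str]:
    """Build a `docker run` command with common least-privilege options.

    `user` (assumed default 1000:1000) should be a non-root account valid for your image;
    `publish` is an optional host:container port mapping such as "5000:5000".
    """
    args = [
        "docker", "run", "--detach",
        "--name", name,
        "--user", user,                          # do not run as root inside the container
        "--cap-drop", "ALL",                     # drop all Linux capabilities
        "--security-opt", "no-new-privileges",   # block privilege escalation via setuid binaries
        "--read-only",                           # mount the container's root filesystem read-only
        "--tmpfs", "/tmp",                       # writable scratch space in memory
    ]
    if publish:
        args += ["-p", publish]
    args.append(image)  # the image name must come after all options
    return args
```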

2. Use a Container Registry

A container registry is a central location for storing and distributing Docker images. It enables version control, management, and efficient distribution of images. Use a container registry for storing and sharing images, and ensure that it is secured with authentication and access control.
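Pushing to a registry means retagging the local image with a reference of the form registry/repository:tag. The Python sketch below composes that reference and the matching docker tag and docker push commands; registry.example.com and team/flask-sample-app are hypothetical names, and the tag check follows Docker's tag grammar (up to 128 letters, digits, underscores, periods, and hyphens, not starting with a period or hyphen). You would run docker login against the registry first.

```python
import re

# Docker tag grammar: first char [A-Za-z0-9_], then up to 127 of [A-Za-z0-9_.-].
TAG_PATTERN = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def image_ref(registry: str, repository: str, tag: str) -> str:
    """Compose '<registry>/<repository>:<tag>', rejecting tags Docker would not accept."""
    if not TAG_PATTERN.fullmatch(tag):
        raise ValueError(f"invalid tag: {tag!r}")
    return f"{registry.rstrip('/')}/{repository}:{tag}"


def push_commands(local_image: str, ref: str) -> list[list[str]]:
    """Commands that retag a locally built image with the registry reference and push it."""
    return [
        ["docker", "tag", local_image, ref],
        ["docker", "push", ref],
    ]
```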

3. Implement Continuous Integration/Continuous Deployment (CI/CD) Pipelines

CI/CD pipelines automate the process of building, testing, and deploying code changes. This enables faster and more reliable software delivery, reducing the risk of errors and downtime. Use a tool such as Jenkins or GitLab CI/CD to implement these pipelines.
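Jenkins and GitLab CI/CD express pipelines in their own configuration files, but the underlying logic is a sequence of stages that stops at the first failure. The Python sketch below models that logic for a hypothetical build, test, and push pipeline that tags the image with the commit SHA; the test stage assumes pytest is installed in the image, and the runner is injectable so the flow can be exercised without Docker.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def subprocess_runner(cmd: Sequence[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(list(cmd), check=False).returncode


def run_pipeline(stages, runner: Runner = subprocess_runner) -> list[str]:
    """Run (name, command) stages in order, raising at the first non-zero exit code.

    Returns the names of all stages when every stage succeeds.
    """
    completed = []
    for name, cmd in stages:
        code = runner(cmd)
        if code != 0:
            raise RuntimeError(f"stage {name!r} failed with exit code {code}")
        completed.append(name)
    return completed


def docker_stages(image: str, commit_sha: str):
    """Hypothetical stages: build, test inside the image (assumes pytest is installed), push."""
    ref = f"{image}:{commit_sha[:12]}"
    return [
        ("build", ["docker", "build", "-t", ref, "."]),
        ("test", ["docker", "run", "--rm", ref, "python", "-m", "pytest"]),
        ("push", ["docker", "push", ref]),
    ]
```

Tagging with the commit SHA ties every pushed image back to the exact source revision it was built from.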

4. Monitor and Manage Containers

Monitoring containers is critical for identifying and addressing performance, security, and availability issues. Use monitoring tools such as Prometheus, Grafana, or Datadog to track containers and their associated metrics. Use container orchestration platforms such as Kubernetes, Docker Swarm, or Amazon ECS to manage containers at scale.

5. Define Resource Limits and Implement Scalability Strategies

Docker containers consume resources such as CPU, memory, and disk. To ensure optimal performance and prevent resource depletion, define resource limits for containers. Implement scaling strategies such as load balancing and auto-scaling to ensure that the application can handle fluctuating traffic demands.
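Resource limits also make capacity planning a matter of arithmetic. The Python sketch below estimates how many containers with given --cpus and --memory limits fit on one host and produces the matching docker run flags; the 10% reserve for the operating system and the Docker daemon is an illustrative assumption, not a Docker default.

```python
def replicas_that_fit(host_cpus: float, host_mem_mib: int, cpu_limit: float,
                      mem_limit_mib: int, reserve_fraction: float = 0.1) -> int:
    """Containers with the given limits that fit on one host.

    `reserve_fraction` (assumed 10%) of CPU and memory is kept free for the OS and Docker.
    """
    if cpu_limit <= 0 or mem_limit_mib <= 0:
        raise ValueError("limits must be positive")
    usable_cpu = host_cpus * (1 - reserve_fraction)
    usable_mem = host_mem_mib * (1 - reserve_fraction)
    # The scarcer resource decides how many containers fit.
    return int(min(usable_cpu // cpu_limit, usable_mem // mem_limit_mib))


def limit_flags(cpu_limit: float, mem_limit_mib: int) -> list[str]:
    """`docker run` flags enforcing the same limits."""
    return ["--cpus", str(cpu_limit), "--memory", f"{mem_limit_mib}m"]
```

On an 8-CPU, 16 GiB host, containers limited to 1.5 CPUs and 2048 MiB are CPU-bound: about 4 fit.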

6. Stay Up-to-Date with Updates and Patches

Regularly update Docker images, operating systems, and other components to ensure that they are secure and stable. This helps to prevent security vulnerabilities and compatibility issues.

By following these best practices, you can ensure that your Docker containers are deployed securely, efficiently, and reliably in production environments.

Examples of Docker Containerization in DevOps

These examples illustrate the versatility and wide-ranging applications of containerization.

Finance

The finance industry has a wealth of sensitive data to manage, making security a top priority. Docker containers allow financial institutions to compartmentalize and secure their applications, reducing the risk of data breaches. For example, JP Morgan Chase uses Docker containers to run their in-house Java applications, improving their development and deployment processes.

Retail

Retailers are constantly striving to provide a seamless customer experience across all channels. Docker containers help retailers manage their applications and streamline their e-commerce processes. Containerization also enables retailers to quickly scale their applications during peak shopping seasons. Walmart, for example, has adopted Docker containers to build and deploy their microservices architecture.

Healthcare

The healthcare industry is subject to strict regulation and compliance requirements when it comes to patient data. Docker containers provide healthcare organizations with a secure way to manage their applications and data, while also enabling them to respond quickly to changing patient needs.

Kaiser Permanente, one of the largest healthcare providers in the US, uses Docker containers to deploy their applications in a consistent and reproducible manner.

Transportation

The transportation industry is increasingly reliant on technology to manage its operations. Docker containers provide transportation companies with a way to modernize their applications and improve their efficiency. Containerization also allows them to deploy applications in a uniform and standardized way. Lyft, for example, has adopted Docker containers to deploy their microservices architecture and improve their overall development process.

Media and Entertainment

The media and entertainment industry requires flexible and scalable application management to keep up with changing audience demands. Docker containers enable media companies to deploy and manage their applications across a variety of platforms and devices. Containerization also helps them to build and test their applications faster.

Netflix, for example, uses Docker containers to manage their microservices architecture and ensure smooth and reliable streaming for their customers.

These examples demonstrate the ways in which Docker containers are transforming the business landscape and making DevOps processes more efficient and effective. By embracing containerization, companies across a wide range of industries can streamline their application management and harness the full potential of modern technology.

Overcoming Challenges and Troubleshooting Tips

While Docker containers offer many benefits in DevOps processes, they can also present some challenges that need to be addressed. Here are some common issues and tips for efficient problem-solving:

Challenge 1: Container Networking

One of the most common challenges with Docker containers is networking. Containers may have difficulty communicating with one another or with external resources. To address this issue, it’s important to understand the network architecture of your containers and ensure that their connectivity is properly configured.

Tip 1:

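Use container orchestration tools such as Kubernetes or Docker Swarm to manage container networking across hosts. On a single host, attach related containers to the same user-defined bridge network (created with docker network create) so they can reach each other by container name through Docker's built-in DNS.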
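When containers cannot reach each other, a simple TCP probe run from inside one container quickly separates DNS and routing problems from application problems. The Python sketch below uses only the standard library; in a container on a shared user-defined network you might call can_connect("db", 5432), where db is a hypothetical container name.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, unreachable network, DNS failure, or timeout
        return False
```

A False result for a container name but True for its IP address points at name resolution, for instance containers attached to different networks.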

Challenge 2: Container Performance

Docker containers can sometimes suffer from performance issues, particularly if they are running resource-intensive applications or are misconfigured.

Tip 2:

Monitor container performance regularly and adjust resource allocation as needed. Use container management tools to ensure efficient resource utilization.

Challenge 3: Container Security

Container security is a critical concern, as containers can be vulnerable to attacks if not properly secured.

Tip 3:

Implement best practices for container security, such as using secure images, limiting container privileges, and monitoring container activity for suspicious behavior.

Challenge 4: Container Storage

Storing and managing container data can be a challenge, particularly if you are using a large number of containers or dealing with a large amount of data.

Tip 4:

Use container storage mechanisms such as Docker volumes or Kubernetes Persistent Volumes to simplify container data management. Consider using cloud-based storage solutions for scalability and flexibility.
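Named volumes keep data outside the container's writable layer, so it survives when the container is removed, and docker run creates a named volume on first use if it does not exist yet. The Python sketch below builds the explicit --mount form of that option; the volume names and paths in the usage line are hypothetical, and absolute Linux-style target paths are assumed.

```python
def volume_mount_args(volume: str, target: str, read_only: bool = False) -> list[str]:
    """`docker run` arguments that mount the named volume `volume` at `target`.

    Assumes a Linux container, so `target` must be an absolute POSIX path.
    """
    if not target.startswith("/"):
        raise ValueError("target must be an absolute path inside the container")
    spec = f"type=volume,source={volume},target={target}"
    if read_only:
        spec += ",readonly"
    return ["--mount", spec]
```

For example, volume_mount_args("pgdata", "/var/lib/postgresql/data") keeps a database's files across container replacements.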

By understanding and addressing these common challenges, you can ensure that your Docker containers are running smoothly and effectively supporting your DevOps processes.

Final Thoughts

Docker containers have revolutionized the way DevOps processes are carried out, providing efficient and streamlined approaches to application development and deployment. Containerization in DevOps with Docker containers has enabled consistent environments, efficient resource utilization, and improved scalability.


FAQ

FAQ 1: How to List All Running Docker Containers?

To list all currently running Docker containers, you can use the docker ps command. This command provides information about the containers, including container ID, image name, creation time, and status.

Code Sample:

```bash
docker ps
```

If you want to see all containers, including stopped ones, use the docker ps -a command:

```bash
docker ps -a
```

This command is particularly useful for troubleshooting and managing container lifecycles.
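For scripting, docker ps accepts a Go template through --format, and docker ps -a --filter status=exited narrows the list on the Docker side. The Python sketch below assumes output from docker ps -a --format "{{.Names}}|{{.Status}}" (the | separator is our choice) and extracts exit codes from statuses such as "Exited (137) 5 minutes ago". An exit code of 137 (128 + 9) means the process was killed with SIGKILL, which often points to the kernel's out-of-memory killer.

```python
def exited_containers(ps_output: str) -> list[tuple[str, int]]:
    """Return (name, exit_code) pairs for exited containers.

    Expects lines like "worker|Exited (0) 3 minutes ago" or "web|Up 2 hours".
    """
    results = []
    for line in ps_output.splitlines():
        if not line.strip():
            continue
        name, status = line.split("|", 1)
        if status.startswith("Exited ("):
            code = int(status[len("Exited ("):status.index(")")])
            results.append((name, code))
    return results
```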

FAQ 2: How to Stop and Remove a Docker Container?

Stopping a Docker container gracefully shuts it down, and removing it deletes it from your system. First, you need to stop the container using docker stop, followed by the container ID or name. Then, you can remove it using docker rm.
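"Gracefully" depends on the application: docker stop sends SIGTERM to the container's main process and waits (10 seconds by default, adjustable with -t) before sending SIGKILL. A process running as PID 1 inside a container that installs no SIGTERM handler effectively ignores the signal, so the container is only killed after the timeout. The Python sketch below shows a worker loop that handles SIGTERM on a POSIX host; process_one_item is a hypothetical callback, and the exec-form CMD ["python", "app.py"] used earlier makes Python PID 1 so it receives the signal directly.

```python
import signal
import threading

# Set when Docker asks the container to stop (docker stop sends SIGTERM to PID 1).
shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Record the stop request; the main loop finishes its current item and returns."""
    shutdown_requested.set()


def worker_loop(process_one_item) -> int:
    """Process items until SIGTERM arrives, then return the number of items handled."""
    signal.signal(signal.SIGTERM, handle_sigterm)  # must be called from the main thread
    handled = 0
    while not shutdown_requested.is_set():
        process_one_item()
        handled += 1
    return handled
```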

Code Sample:

To stop a container:

```bash
docker stop <container_id_or_name>
```

To remove a container:

```bash
docker rm <container_id_or_name>
```

If you are certain you want to skip the graceful shutdown, you can use the -f flag with docker rm to remove a running container in one step. Note that -f kills the container immediately with SIGKILL instead of giving it a chance to shut down cleanly:

```bash
docker rm -f <container_id_or_name>
```

FAQ 3: How to Access the Shell of a Running Docker Container?

Accessing the shell of a running Docker container is often necessary for debugging, monitoring, or configuring the containerized application. The docker exec command allows you to run a command in a running container, which for shell access, is typically a shell binary like /bin/bash or /bin/sh.

Code Sample:

To access the bash shell of a container:

```bash
docker exec -it <container_id_or_name> /bin/bash
```

If the container does not have bash (common in Alpine-based and other minimal images), use /bin/sh instead:

```bash
docker exec -it <container_id_or_name> /bin/sh
```

The -i flag keeps standard input open and -t allocates a pseudo-terminal; together they give you an interactive shell session.

These FAQs and code samples address common operations and tasks that developers and system administrators perform with Docker containers, helping to manage and interact with containers effectively.

James is an esteemed technical author specializing in Operations, DevOps, and computer security. With a master’s degree in Computer Science from Caltech, he possesses a solid educational foundation that fuels his extensive knowledge and expertise. Residing in Austin, Texas, James thrives in the vibrant tech community, utilizing his cozy home office to craft informative and insightful content. His passion for travel takes him to Mexico, a favorite destination where he finds inspiration amidst captivating beauty and rich culture. Accompanying James on his adventures is his faithful companion, Guber, who brings joy and a welcome break from the writing process on long walks.

With a keen eye for detail and a commitment to staying at the forefront of industry trends, James continually expands his knowledge in Operations, DevOps, and security. Through his comprehensive technical publications, he empowers professionals with practical guidance and strategies, equipping them to navigate the complex world of software development and security. James’s academic background, passion for travel, and loyal companionship make him a trusted authority, inspiring confidence in the ever-evolving realm of technology.