Implementing DevOps can transform your software development lifecycle, bringing faster deployments, improved efficiency, and closer collaboration across teams.

Whether you are just starting with DevOps or looking to enhance your current practices, this article will provide valuable insights and guidance.

So, let’s look at how to implement DevOps and adopt it successfully.

Understanding DevOps

DevOps is a set of principles and practices focused on breaking down silos between development and operations teams, fostering collaboration, and promoting continuous delivery of high-quality software. At its core, DevOps is a culture that emphasizes shared responsibility, trust, and continuous improvement.

DevOps principles are rooted in Agile software development methodologies, and they emphasize the importance of collaboration, communication, and feedback loops. Collaboration in DevOps involves bringing together cross-functional teams, including developers, operations personnel, and other stakeholders, to work towards a common goal and achieve faster time-to-market.

DevOps culture requires an environment that encourages experimentation, innovation, and learning. It is a mindset that values transparency, accountability, and continuous self-reflection. By adopting a DevOps culture, organizations can create a more agile and productive work environment that supports business growth and innovation.

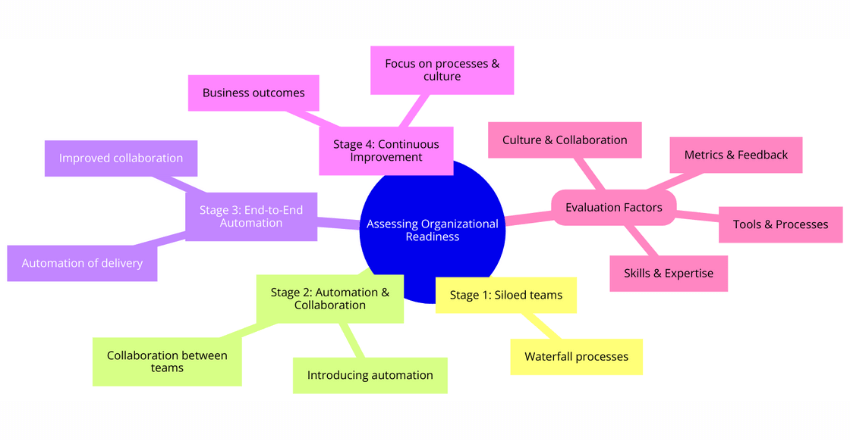

Assessing Organizational Readiness

Before embarking on the DevOps journey, it is essential to assess your organization’s readiness for DevOps implementation. A DevOps maturity model offers a framework for evaluating the current state of your organization’s processes, tools, and culture.

A typical DevOps maturity model defines four stages of DevOps adoption:

| Stage | Description |
|---|---|
| Stage 1 | Siloed teams working in traditional waterfall processes |
| Stage 2 | Introducing some level of automation and collaboration between teams |
| Stage 3 | Automation of end-to-end delivery processes and improved collaboration between teams |
| Stage 4 | Continuous improvement of processes and culture, with a focus on business outcomes |

To evaluate your organization’s readiness, consider the following factors:

- Culture and collaboration: Is your organization’s culture supportive of DevOps practices, and do teams collaborate effectively across departments?

- Tools and processes: Are your existing tools and processes suited to DevOps, or will they need to be updated or replaced?

- Skills and expertise: Do your teams have the necessary skills and experience to work in a DevOps environment, or will training be required?

- Metrics and feedback: Does your organization have a culture of measuring and continuously improving processes, and is there a feedback loop to make necessary changes?

Evaluating your organization’s readiness for DevOps implementation can help you identify areas that need improvement and set a roadmap for successful adoption.

Building a DevOps Team

With a solid understanding of DevOps principles, it’s time to assemble a team that can implement them successfully. A truly effective DevOps team must be cross-functional, consisting of individuals with skills and expertise across development, operations, and other relevant areas.

When assembling a DevOps team, it’s important to consider the following roles:

| Role | Description |
|---|---|
| DevOps Engineer | Responsible for designing, implementing, and maintaining the DevOps pipeline. |
| Developer | Responsible for writing and testing code. |
| Operations Engineer | Responsible for managing infrastructure and ensuring system availability and reliability. |
| Quality Assurance Engineer | Responsible for testing and ensuring the quality of the product. |

Each of these roles is critical to the success of the DevOps team. It’s important to choose team members who are collaborative, communicative, and willing to learn and adapt to new tools and processes.

Establishing a collaborative environment is also key to building a successful DevOps team. This means breaking down silos between departments and fostering effective communication and teamwork.

This can be achieved through regular meetings, such as daily stand-ups, and by incorporating tools that facilitate collaboration, such as chat platforms and project management software.

Assembling a cross-functional DevOps team may seem challenging, but doing so can result in a more efficient and effective development process. With the right team in place, implementing DevOps practices becomes much more manageable.

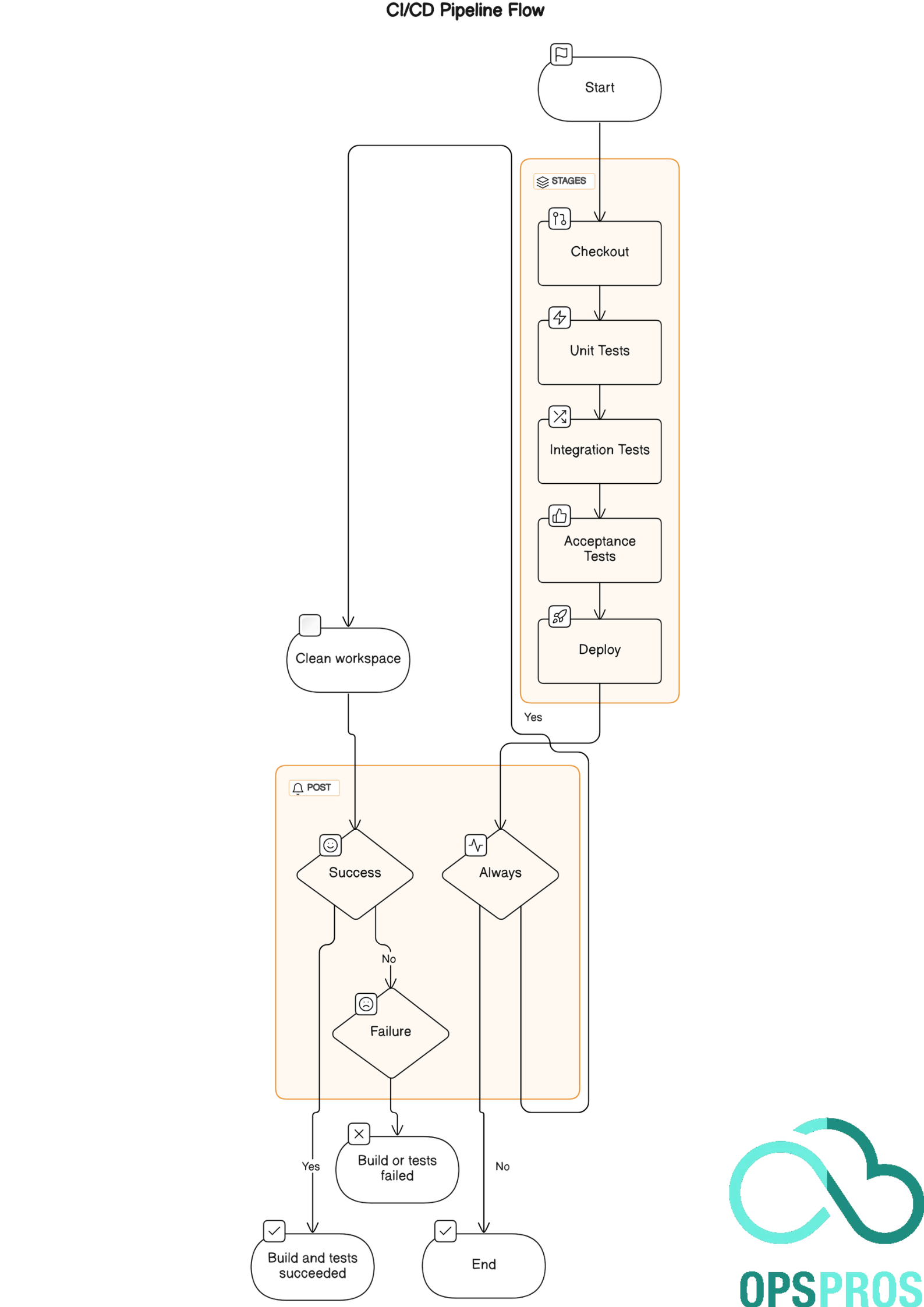

Establishing a Continuous Integration (CI) Pipeline

Implementing a continuous integration (CI) pipeline requires automating the build and test processes to ensure that code changes are tested thoroughly before they are integrated. A well-designed CI pipeline delivers numerous benefits, including reduced software defects, faster time-to-market, and improved collaboration between development and operations teams.

Here are some best practices to follow when creating a CI pipeline:

Automate everything: Automate as much of the testing process as possible, including the running of unit tests, integration tests, and acceptance tests. Automated testing speeds up the build process and provides fast feedback to developers on code quality.

Example

The following sample uses Jenkins, a popular CI/CD tool, with Maven as the build tool. It automates unit tests, integration tests, and acceptance tests.

Jenkinsfile (Jenkins Pipeline)

This Jenkinsfile defines the CI/CD pipeline in Jenkins. When the Jenkins job is configured to build on each push (for example through a webhook or SCM polling), it runs the unit, integration, and acceptance tests for every new commit.

```groovy
pipeline {
    agent any

    stages {
        stage('Checkout') {
            steps {
                // Checks out the source code
                checkout scm
            }
        }

        stage('Unit Tests') {
            steps {
                // Run unit tests using Maven
                script {
                    // 'Maven3' must match a Maven installation name configured in Jenkins
                    def mvnHome = tool 'Maven3'
                    sh "${mvnHome}/bin/mvn clean test"
                }
            }
        }

        stage('Integration Tests') {
            steps {
                // Run integration tests. This assumes integration tests are triggered with a specific profile or goal
                script {
                    def mvnHome = tool 'Maven3'
                    sh "${mvnHome}/bin/mvn verify -PintegrationTests"
                }
            }
        }

        stage('Acceptance Tests') {
            steps {
                // Run acceptance tests. This might involve a different tool or approach depending on your setup
                // For demonstration, using Maven with a hypothetical acceptance test profile
                script {
                    def mvnHome = tool 'Maven3'
                    sh "${mvnHome}/bin/mvn verify -PacceptanceTests"
                }
            }
        }

        stage('Deploy') {
            steps {
                // Deploy your application to a staging or production environment
                // This is typically done only after all tests pass
                sh './deploy-script.sh'
            }
        }
    }

    post {
        always {
            // Clean up workspace after build (cleanWs requires the Workspace Cleanup plugin)
            cleanWs()
        }
        success {
            // Actions to take if the pipeline succeeds
            echo 'Build and tests succeeded!'
        }
        failure {
            // Actions to take if the pipeline fails
            echo 'Build or tests failed.'
        }
    }
}
```

Maven Configuration for Integration and Acceptance Tests

In your pom.xml, you can separate unit tests from integration and acceptance tests using profiles or by adhering to naming conventions. The Maven Failsafe Plugin is often used for integration tests, while the Surefire Plugin is used for unit tests.

Here’s how you might configure them:

```xml
<profiles>
  <profile>
    <id>integrationTests</id>
    <build>
      <plugins>
        <plugin>
          <groupId>org.apache.maven.plugins</groupId>
          <artifactId>maven-failsafe-plugin</artifactId>
          <version>2.22.2</version>
          <executions>
            <execution>
              <goals>
                <goal>integration-test</goal>
                <goal>verify</goal>
              </goals>
            </execution>
          </executions>
        </plugin>
      </plugins>
    </build>
  </profile>
  <profile>
    <id>acceptanceTests</id>
    <!-- Configuration for acceptance tests -->
    <!-- This could involve setting up additional plugins or dependencies -->
  </profile>
</profiles>
```

This setup assumes you’re familiar with Jenkins and Maven. The Jenkinsfile defines a multi-stage pipeline that automates the entire process, from pulling the latest code to executing different types of tests, and finally, to deployment.

The Maven configuration snippet demonstrates how to separate different types of tests using profiles.

Keep builds fast: The quicker the build, the more frequently code can be integrated and the sooner it can be tested. Aim for a CI pipeline that completes within a few minutes so that developers can test changes frequently.

Use version control: Version control tools like Git allow developers to track changes to the codebase, and ensure that all team members are working on the most up-to-date version of the code.

Implement continuous feedback: CI pipelines should provide real-time feedback to developers on the code’s overall health, including tests passed, code coverage, and other metrics.

Maintain a clean build environment: Ensure that all build dependencies are available and tested in a clean environment. This helps ensure that the build is reproducible and the results can be trusted.

By implementing these best practices, a well-designed CI pipeline will help to detect problems early in the development process, reduce waste, and improve overall code quality.
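
The continuous-feedback practice above can be sketched in a few lines of Python. This is a minimal illustration, assuming the build writes JUnit-style XML reports (Maven Surefire typically places them under target/surefire-reports); the sample report, the test names, and the functions `summarize_report` and `format_feedback` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed JUnit-style report used only for illustration.
SAMPLE_REPORT = """<testsuite name="com.example.AppTest" tests="4" failures="1" errors="0" skipped="1">
  <testcase classname="com.example.AppTest" name="addsNumbers"/>
  <testcase classname="com.example.AppTest" name="handlesNull"/>
  <testcase classname="com.example.AppTest" name="rejectsBadInput">
    <failure message="expected exception"/>
  </testcase>
  <testcase classname="com.example.AppTest" name="slowPath">
    <skipped/>
  </testcase>
</testsuite>"""


def summarize_report(xml_text):
    """Count passed, failed, and skipped tests in one <testsuite> element."""
    suite = ET.fromstring(xml_text)
    total = int(suite.get("tests", 0))
    failed = int(suite.get("failures", 0)) + int(suite.get("errors", 0))
    skipped = int(suite.get("skipped", 0))
    # Collect the names of test cases that carry a <failure> or <error> child.
    failing = [
        case.get("name")
        for case in suite.iter("testcase")
        if case.find("failure") is not None or case.find("error") is not None
    ]
    return {
        "total": total,
        "passed": total - failed - skipped,
        "failed": failed,
        "skipped": skipped,
        "failing_tests": failing,
    }


def format_feedback(summary):
    """Render a one-line status message suitable for a chat or commit status."""
    status = "FAILED" if summary["failed"] else "PASSED"
    return (
        f"{status}: {summary['passed']}/{summary['total']} passed, "
        f"{summary['failed']} failed, {summary['skipped']} skipped"
    )


summary = summarize_report(SAMPLE_REPORT)
print(format_feedback(summary))
```

A real pipeline would aggregate every report file and post the message wherever the team already looks, such as a chat channel or the pull request.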

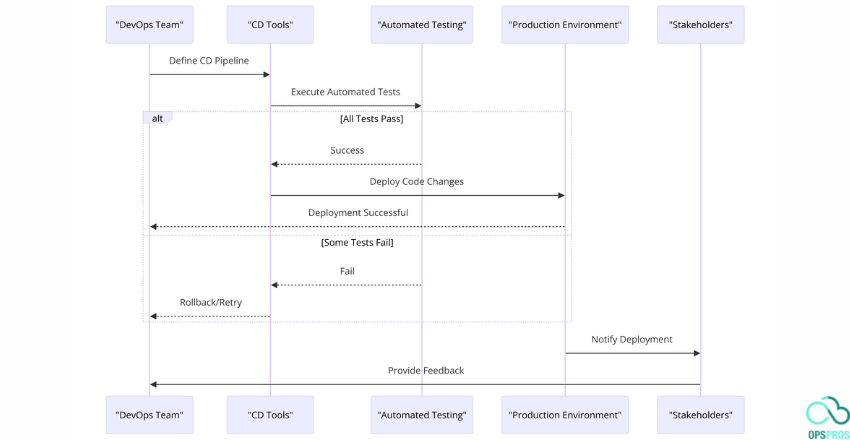

Implementing Continuous Delivery (CD)

Continuous delivery (CD) builds on continuous integration and is an essential part of DevOps implementation. It is the practice of automating the release process, from testing through to production, so that new code changes can be released quickly and reliably.

Automated deployment is a key component of CD, allowing teams to deploy code changes with a push of a button and reducing the risk of human error.

To begin implementing CD, it is essential to have a well-defined and automated process for testing and building code changes. Once code changes pass the testing phase, they can be automatically deployed to production, using a CD pipeline.

CD Pipeline

A CD pipeline is a series of automated steps that code changes go through before being deployed to production. CD pipelines not only automate the deployment process but also provide visibility into the status of code changes, making it easier for teams to collaborate and troubleshoot issues.

Automated deployment relies on a variety of tools and technologies, such as configuration management tools, containerization platforms, and automated testing frameworks. These tools help ensure that the necessary infrastructure is in place, and the deployed code is stable and secure.

Automated Deployment

The goal of automated deployment is to minimize the cost, time, and effort required to deploy code changes to production. Automated deployment keeps environments consistent, secure, and up to date, reducing the likelihood that a deployment fails or causes downtime.

Automated deployment also allows for easy rollback to a previous version in the event of a problem. By automating the entire process, teams can deploy and roll back code changes without spending time and resources on manual steps.
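
To make the rollback idea concrete, here is a minimal Python sketch that only tracks which versions were deployed so the previous one can be restored. The `ReleaseHistory` class and the version strings are hypothetical; a real deployment tool would also switch traffic or redeploy the older artifact.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReleaseHistory:
    """Remembers deployed versions in order, newest last."""

    deployed: List[str] = field(default_factory=list)

    def deploy(self, version: str) -> str:
        # Record the new version as the current release.
        self.deployed.append(version)
        return version

    @property
    def current(self) -> Optional[str]:
        return self.deployed[-1] if self.deployed else None

    def rollback(self) -> str:
        # Drop the current release and return the one before it.
        if len(self.deployed) < 2:
            raise RuntimeError("no previous version to roll back to")
        self.deployed.pop()
        return self.deployed[-1]


history = ReleaseHistory()
history.deploy("1.0.0")
history.deploy("1.1.0")
print("rolled back to", history.rollback())
```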

Release Management

Effective release management is crucial to ensure that code changes are released reliably and with minimal downtime. Release management involves coordinating the deployment of code changes across different teams, communicating changes to stakeholders, and documenting the changes that have been made.

Teams can leverage automation tools to manage releases, such as using version control systems to track changes and rollbacks or using release management software to track progress and manage tasks.

Release management should be an ongoing process, with teams constantly reviewing and improving their release management practices to ensure success.

Infrastructure as Code (IaC)

Automating infrastructure can help DevOps teams create consistent, repeatable, and reliable environments. IaC is the practice of managing infrastructure through machine-readable code, allowing teams to version, test, and deploy infrastructure with the same agility as code.

There are several IaC tools available, such as Terraform, Ansible, and CloudFormation, each with its unique features and strengths.

Terraform, for example, is a tool that allows users to define infrastructure in a declarative way and supports multiple cloud platforms, while Ansible is an agentless tool that uses SSH to manage and configure servers.

The following basic example uses both Terraform and Ansible. It shows how you might begin to automate the provisioning of a virtual machine on AWS with Terraform and then configure the software on that VM with Ansible.

Terraform Example: Provisioning an EC2 Instance

```hcl
provider "aws" {
  region = "us-west-2"
}

resource "aws_instance" "example" {
  ami           = "ami-0c55b159cbfafe1f0" # Example AMI ID; replace with a valid one
  instance_type = "t2.micro"

  tags = {
    Name = "ExampleInstance"
  }
}
```

Before applying this configuration, initialize your Terraform working directory with `terraform init`. Then run `terraform apply` and confirm the action when prompted.

Ansible Example: Configuring the EC2 Instance

After provisioning your infrastructure, use Ansible to configure your server. This example Ansible playbook installs Nginx on the newly created EC2 instance. Ensure you have Ansible installed and that you can access your EC2 instance via SSH.

File: playbook.yml

```yaml
- hosts: all
  become: yes # Required to gain root privileges
  tasks:
    - name: Update apt-get repo and cache
      apt:
        update_cache: yes
        cache_valid_time: 3600 # One hour

    - name: Install Nginx
      apt:
        name: nginx
        state: present

    - name: Start Nginx
      service:
        name: nginx
        state: started
        enabled: yes
```

To run this playbook, you’d typically create an inventory file with the IP addresses of your EC2 instances, then execute the playbook using the `ansible-playbook` command, specifying your inventory.

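Running the Playbook:

```shell
ansible-playbook -i inventory.ini playbook.yml
```

Inventory File Example (inventory.ini):

```ini
[webservers]
your_ec2_instance_ip ansible_user=ubuntu ansible_ssh_private_key_file=/path/to/your/key.pem
```

Replace your_ec2_instance_ip with the public IP address of your EC2 instance and /path/to/your/key.pem with the path to your SSH key for AWS. The playbook’s apt tasks assume a Debian- or Ubuntu-based AMI, where the default login user is usually ubuntu. On Amazon Linux the user is ec2-user, and the tasks would need the yum or dnf module instead.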
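
When there are several instances, writing the inventory by hand gets tedious. The sketch below shows one way to render an INI-style inventory from a list of addresses. The `render_inventory` function is hypothetical, the IP addresses come from a documentation-only range, and the login user is a parameter because it depends on the AMI.

```python
def render_inventory(hosts, user, key_path, group="webservers"):
    """Return INI-style Ansible inventory text for the given hosts."""
    lines = [f"[{group}]"]
    for host in hosts:
        lines.append(
            f"{host} ansible_user={user} ansible_ssh_private_key_file={key_path}"
        )
    return "\n".join(lines) + "\n"


# Placeholder addresses; "ubuntu" assumes an Ubuntu AMI, matching the apt tasks.
inventory = render_inventory(
    hosts=["203.0.113.10", "203.0.113.11"],
    user="ubuntu",
    key_path="/path/to/your/key.pem",
)
print(inventory, end="")
```

The resulting text can be written to inventory.ini and passed to `ansible-playbook` with the `-i` option.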

Integrating with CI/CD

For both Terraform and Ansible, integrating these tools into a CI/CD pipeline involves adding steps in your pipeline configuration to run these scripts automatically. This setup could involve:

- Terraform: Running `terraform plan` and `terraform apply` within a CI/CD job to deploy or update infrastructure.

- Ansible: Triggering Ansible playbooks post-infrastructure deployment to configure or update server software.

Both should be integrated with version control (like Git) for tracking changes and enabling collaboration. Always ensure sensitive information (like AWS credentials or SSH keys) is securely managed, using secrets management tools provided by your CI/CD platform or third-party services.

Continuous integration systems can be used to test infrastructure code before merging it to the main branch, ensuring that code changes do not introduce bugs or vulnerabilities.
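
One way a CI job might test infrastructure changes before merge is to inspect the Terraform plan and stop if it would destroy anything. The Python sketch below assumes the plan was saved with `terraform plan -out=tfplan` and exported with `terraform show -json tfplan`. The sample JSON is a hand-written, heavily trimmed stand-in for that output, and `destructive_changes` and the resource addresses are hypothetical.

```python
import json

# Trimmed stand-in for `terraform show -json` output; real plans contain far more fields.
SAMPLE_PLAN = json.dumps({
    "resource_changes": [
        {"address": "aws_instance.example", "change": {"actions": ["create"]}},
        {"address": "aws_instance.legacy", "change": {"actions": ["delete"]}},
        {"address": "aws_instance.web", "change": {"actions": ["delete", "create"]}},
    ]
})


def destructive_changes(plan_json):
    """Return addresses of resources the plan would delete or replace."""
    plan = json.loads(plan_json)
    return [
        change["address"]
        for change in plan.get("resource_changes", [])
        if "delete" in change["change"]["actions"]
    ]


flagged = destructive_changes(SAMPLE_PLAN)
if flagged:
    print("Plan needs manual review:", ", ".join(flagged))
```

A pipeline step could exit with a non-zero status when the list is not empty, so that destructive plans require an explicit approval.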

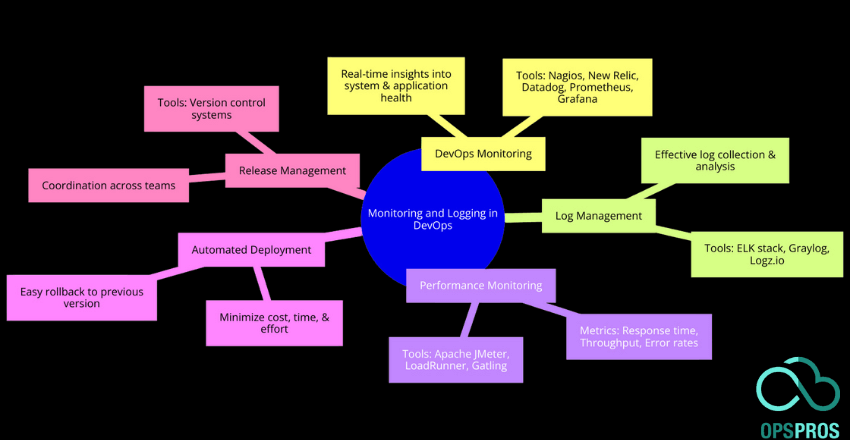

Monitoring and Logging in DevOps

In a DevOps environment, monitoring and logging are critical for ensuring the availability, performance, and reliability of applications and infrastructure. Effective monitoring tools and techniques can help identify issues before they impact users and allow teams to respond quickly to incidents.

Effective logging practices can help with troubleshooting and incident response, providing valuable insights into the root cause of issues.

DevOps monitoring:

There are many monitoring tools available in the market, each with its own strengths and weaknesses. It is important to choose tools that align with the needs of your organization and support your DevOps implementation.

Some popular monitoring tools in the DevOps ecosystem include Nagios, New Relic, Datadog, Prometheus, and Grafana. These tools provide real-time insights into system and application health, enabling proactive issue resolution.

Log management:

Logging is an essential practice for any DevOps team. By collecting and analyzing logs, teams can gain valuable insights into how applications are performing and where issues are occurring. Effective log management tools and practices can help with debugging, auditing, and compliance.

Some popular log management tools in the DevOps ecosystem include ELK stack, Graylog, and Logz.io. These tools can be used to collect, visualize, and analyze log data from across the organization.
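
Log management tools generally work best with structured records. As a minimal sketch, the Python standard library can emit one JSON object per log line. The `JsonFormatter` class, the field names, and the `checkout-service` logger name are assumptions for illustration, not a required schema.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Formats each record as a single JSON object."""

    def format(self, record):
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_logger(name, stream):
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate output through the root logger
    return logger


buffer = io.StringIO()  # stands in for stdout or a log file
log = build_logger("checkout-service", buffer)
log.info("order %s placed", "A-1001")
log.error("payment gateway timeout")
print(buffer.getvalue(), end="")
```

Because every line is valid JSON, a log collector can index the level and message fields without custom parsing rules.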

Performance monitoring:

Performance monitoring is critical for ensuring that applications are meeting performance expectations. This includes monitoring metrics such as response time, throughput, and error rates.

By collecting and analyzing performance metrics, teams can identify performance bottlenecks and optimize application performance.

Some popular load and performance testing tools in the DevOps ecosystem include Apache JMeter, LoadRunner, and Gatling. These tools can be used to simulate user traffic and measure performance under different conditions.
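
The metrics named above (response time, throughput, and error rate) are simple to compute once request samples are available. The Python sketch below uses made-up samples and treats any 5xx status as an error. The `Request` type, the `summarize` function, and the nearest-rank percentile method are choices made for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    duration_ms: float
    status: int


def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(values)
    rank = (pct * len(ordered) + 99) // 100  # integer ceiling of pct/100 * n
    return ordered[max(rank, 1) - 1]


def summarize(requests, window_seconds):
    """Compute p95 latency, throughput, and error rate for one time window."""
    durations = [r.duration_ms for r in requests]
    errors = sum(1 for r in requests if r.status >= 500)
    return {
        "p95_ms": percentile(durations, 95),
        "throughput_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests),
    }


# Twenty synthetic requests observed over a 10-second window; two of them failed.
requests = [
    Request(duration_ms=10.0 * i, status=500 if i in (7, 14) else 200)
    for i in range(1, 21)
]
print(summarize(requests, window_seconds=10))
```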

Monitoring and logging are essential practices that help ensure the success of a DevOps implementation. By selecting the right tools and implementing effective practices, teams can proactively identify and resolve issues, optimize performance, and continually improve their DevOps pipeline.

Security and Compliance in DevOps

DevOps is not just about speed and efficiency; it’s also about security and compliance. Teams therefore need a “security-first” mindset to ensure that their pipeline is secure and that their releases meet compliance standards.

This is where the concept of DevSecOps comes into play. DevSecOps is the integration of security practices throughout the DevOps process, ensuring that security is embedded in every stage of the development lifecycle.

Secure DevOps practices involve implementing security controls early on in the development process, using code analysis tools to identify vulnerabilities and misconfigurations, and incorporating automated security checks into the pipeline. Additionally, developers must be trained to write secure code and follow secure coding practices.
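
As an illustration of an automated security check, the sketch below scans text for two kinds of hard-coded secrets. The patterns are deliberately simple and far from exhaustive; dedicated secret scanners cover many more cases. The `scan_text` function and the sample content are hypothetical, and the key shown is the placeholder value AWS uses in its own documentation.

```python
import re

# Illustrative patterns only; real scanners use much larger rule sets.
PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
}

SAMPLE = '''region = "us-west-2"
access_key = "AKIAIOSFODNN7EXAMPLE"
instance_type = "t2.micro"
'''


def scan_text(text):
    """Return (line number, finding label) pairs for every suspicious line."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, label))
    return findings


for line_no, label in scan_text(SAMPLE):
    print(f"line {line_no}: possible {label}")
```

Run as a pipeline step, a check like this can fail the build before a credential ever reaches the main branch.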

Compliance automation is also critical in DevOps. Compliance is an ongoing process that involves meeting regulatory requirements, ensuring that security policies are enforced, and tracking changes made to applications and infrastructure.

Organizations should implement compliance automation tools that help them track and manage compliance-related tasks, assess their compliance posture, and automate audit reporting.

By integrating security and compliance into the DevOps process, teams can mitigate risks and reduce the likelihood of security breaches and compliance violations. This enables them to deliver secure and compliant applications at a faster pace, without compromising on quality.

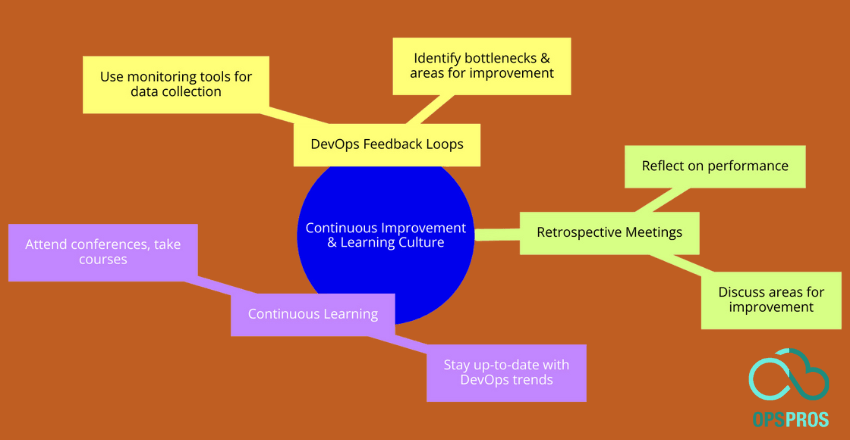

Continuous Improvement and Learning Culture

One of the key aspects of DevOps implementation is continuous improvement and learning. DevOps is a process that requires constant iteration and refinement to ensure that it is delivering the desired outcomes.

To achieve this, DevOps teams need to establish feedback loops and retrospective meetings to gather insights and identify areas for improvement.

DevOps Feedback Loops

Feedback loops are an essential component of DevOps implementation. They provide valuable insights into the effectiveness of the DevOps pipeline and help teams to identify bottlenecks and areas for improvement.

For example, teams can use monitoring tools to collect data on application and infrastructure performance and use this data to identify issues and optimize the pipeline.

Retrospective Meetings

Retrospective meetings are another critical element of DevOps implementation. These meetings provide an opportunity for the team to reflect on their performance and identify areas for improvement.

During the retrospective meeting, the team can discuss what worked well and what didn’t work well during the previous iteration. They can then use this information to adjust their processes and improve their performance moving forward.

Continuous Learning

Another vital element of DevOps implementation is continuous learning. DevOps is a rapidly evolving field, and teams need to continuously learn and adapt to stay competitive. This may involve attending conferences, taking courses, or simply staying up-to-date with the latest DevOps trends and best practices.

External Resources

https://www.microfocus.com/en-us/products/loadrunner-professional/overview

FAQ

Implementing DevOps practices within an organization involves a blend of cultural philosophies, tools, and practices that aim to increase an organization’s ability to deliver applications and services at high velocity.

Below are three frequently asked questions about implementing DevOps, along with answers and code samples where applicable.

1. How do I start implementing DevOps in my organization?

Answer:

Implementing DevOps starts with a cultural shift within the organization, emphasizing collaboration, communication, and integration among IT professionals and software developers. Begin by:

- Assess your current state: Understand your current development and deployment processes and where the bottlenecks or inefficiencies lie.

- Set clear goals: Define what success looks like for your DevOps initiative, such as faster deployment times, improved deployment frequency, or reduced failure rate of new releases.

- Choose the right tools: Invest in tools that automate manual processes, enhance collaboration, and ensure continuous integration and continuous delivery (CI/CD).

- Educate and train your team: Ensure your team understands DevOps principles and is comfortable using the tools and processes that you will implement.

- Implement gradually: Start with small, manageable projects to integrate DevOps practices and tools, then scale up as you gain confidence and experience.

Code Sample:

While DevOps is more about practices and culture, tools like Jenkins can be instrumental in implementing CI/CD. Here’s an example Jenkinsfile for a simple CI/CD pipeline:

```groovy
pipeline {
    agent any

    stages {
        stage('Build') {
            steps {
                // Use your build tool here. For example, Maven:
                sh 'mvn clean package'
            }
        }

        stage('Test') {
            steps {
                // Run tests here. For instance, with Maven:
                sh 'mvn test'
            }
        }

        stage('Deploy') {
            steps {
                // Deploy your application
                // This could be to a staging environment or production
                sh './deploy-to-prod.sh'
            }
        }
    }
}
```

2. How do I ensure continuous integration and continuous delivery (CI/CD) in DevOps?

Answer:

Continuous Integration (CI) and Continuous Delivery (CD) are cornerstone practices in DevOps, ensuring that code changes are automatically built, tested, and prepared for a release to production. To ensure CI/CD:

- Implement a CI/CD tool: Use tools like Jenkins, GitLab CI/CD, or CircleCI to automate the integration and delivery processes.

- Version Control: Ensure all code and configuration files are stored in a version control system like Git.

- Automate Testing: Automate your testing process to run with every code change.

- Infrastructure as Code (IaC): Use IaC tools like Terraform or Ansible to manage and provision infrastructure through code.

- Monitor and Feedback: Implement monitoring tools to track application performance and user feedback to inform future development.

Code Sample:

Here is a simple GitLab CI/CD pipeline example defined in .gitlab-ci.yml:

```yaml
stages:
  - build
  - test
  - deploy

build_job:
  stage: build
  script:
    - echo "Building the project..."
    - make build

test_job:
  stage: test
  script:
    - echo "Running tests..."
    - make test

deploy_job:
  stage: deploy
  script:
    - echo "Deploying to production..."
    - make deploy
```

3. How can I measure the success of DevOps implementation?

Answer:

Success in DevOps can be measured through a combination of qualitative and quantitative metrics, including:

- Deployment Frequency: How often do you deploy code to production?

- Change Lead Time: The time it takes for a commit to get into production.

- Change Failure Rate: The percentage of deployments causing a failure in production.

- Mean Time to Recovery (MTTR): How quickly you can recover from a failure.

- Culture and Collaboration: Employee satisfaction and the degree of collaboration between teams can also indicate success.

Collecting and analyzing these metrics over time will help you understand the impact of DevOps practices on your organization’s performance and guide continuous improvement.
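
The four quantitative metrics above can be computed from basic deployment records. The Python sketch below uses invented timestamps. The `Deployment` record and its field names are assumptions for illustration; in practice the data would come from your CI/CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set when a failed release was fixed


def delivery_metrics(deployments, period_days):
    """Compute deployment frequency, lead time, failure rate, and MTTR."""
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "avg_lead_time": sum(lead_times, timedelta()) / len(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "mttr": sum(recoveries, timedelta()) / len(recoveries) if recoveries else None,
    }


base = datetime(2024, 1, 1, 9, 0)  # arbitrary start date for the sample data
deployments = [
    Deployment(base, base + timedelta(hours=4)),
    Deployment(
        base + timedelta(days=1),
        base + timedelta(days=1, hours=8),
        failed=True,
        restored_at=base + timedelta(days=1, hours=9),
    ),
    Deployment(base + timedelta(days=2), base + timedelta(days=2, hours=6)),
    Deployment(base + timedelta(days=3), base + timedelta(days=3, hours=2)),
]
print(delivery_metrics(deployments, period_days=4))
```

Tracking these values per week or per month makes trends visible, which matters more than any single reading.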

Implementing DevOps is an iterative process that involves continuous learning and adaptation. These FAQs provide a foundational understanding, but success in DevOps requires ongoing effort and commitment to improvement.

James is an esteemed technical author specializing in Operations, DevOps, and computer security. With a master’s degree in Computer Science from CalTech, he possesses a solid educational foundation that fuels his extensive knowledge and expertise. Residing in Austin, Texas, James thrives in the vibrant tech community, utilizing his cozy home office to craft informative and insightful content. His passion for travel takes him to Mexico, a favorite destination where he finds inspiration amidst captivating beauty and rich culture. Accompanying James on his adventures is his faithful companion, Guber, who brings joy and a welcome break from the writing process on long walks.

With a keen eye for detail and a commitment to staying at the forefront of industry trends, James continually expands his knowledge in Operations, DevOps, and security. Through his comprehensive technical publications, he empowers professionals with practical guidance and strategies, equipping them to navigate the complex world of software development and security. James’s academic background, passion for travel, and loyal companionship make him a trusted authority, inspiring confidence in the ever-evolving realm of technology.