In many DevOps teams, Kubernetes has become a cornerstone of modern software delivery, providing a common platform for continuous delivery and reliable operations.

Kubernetes has become a standard tool for managing containerized applications and is increasingly being integrated into DevOps workflows. Kubernetes provides numerous benefits, including enhanced efficiency, scalability, and reliability in application development and deployment.

Kubernetes in DevOps

Kubernetes has become a popular choice for managing containerized applications at scale in DevOps workflows. It is an open-source platform that automates the deployment, scaling, and management of containerized workloads, making it easier to develop, deploy, and maintain applications.

DevOps is a methodology that emphasizes collaboration and communication between development and operations teams to deliver high-quality software quickly and efficiently.

Kubernetes fits in with this methodology by providing a platform for managing the deployment and scaling of containerized applications across complex environments.

At its core, Kubernetes uses a declarative approach to manage containerized applications. Developers describe the desired state of their applications (for example, which container image to run and how many replicas), and Kubernetes controllers continuously compare that description with the actual state of the cluster and reconcile any differences. This keeps the desired state in place even as the environment changes.

How Kubernetes fits into the DevOps workflow

Kubernetes is a powerful tool that can help streamline the DevOps workflow by automating many of the tasks associated with deploying and managing containerized applications.

When integrated into the DevOps process, Kubernetes can help to:

- Improve scalability by enabling the deployment and management of large-scale containerized applications.

- Increase reliability by automating many of the manual processes associated with managing applications.

- Speed up time to market by automating the deployment and management of applications.

- Reduce costs by optimizing resource usage and minimizing downtime.

Overall, Kubernetes enables developers and operations teams to work together more efficiently by providing a unified platform for managing containerized applications at scale.

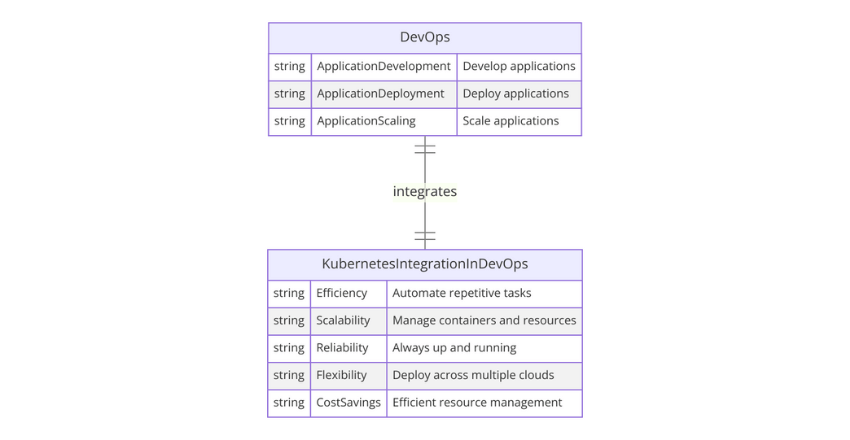

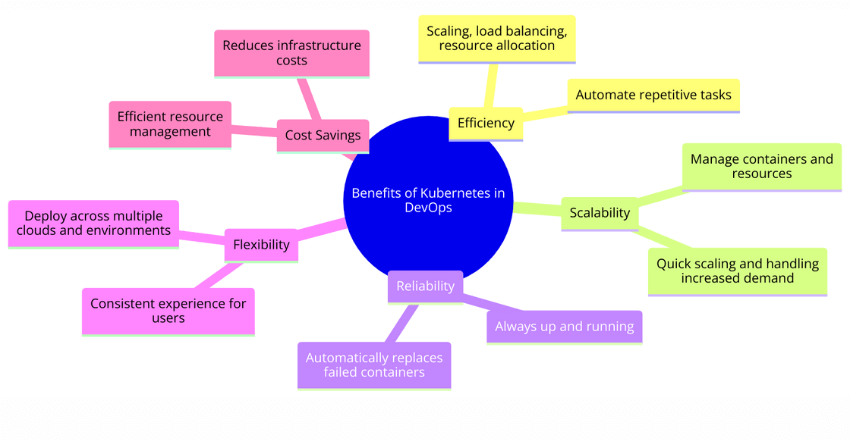

Benefits of Kubernetes in DevOps

Kubernetes integration in DevOps brings a multitude of benefits that streamline application development and deployment workflows. Here are some of the critical advantages of using Kubernetes in DevOps:

Efficiency: Kubernetes enables DevOps teams to automate repetitive tasks, such as scaling, load balancing, and resource allocation, resulting in a more efficient workflow.

Scalability: Kubernetes’s ability to manage containers and resources ensures that applications can scale quickly and handle increased demand.

Reliability: Kubernetes continuously works to keep the declared number of application instances running, automatically restarting or replacing failed containers to improve application uptime.

Flexibility: Because Kubernetes runs on all major clouds as well as on-premises, DevOps teams can deploy applications across multiple clouds and environments with a consistent deployment model.

Cost Savings: Kubernetes’s resource management, such as packing workloads onto shared nodes and scaling them with demand, can reduce infrastructure costs, enabling organizations to scale their applications while keeping costs under control.

Overall, Kubernetes in DevOps enables organizations to develop, deploy, and scale applications efficiently, reliably, and cost-effectively.

Kubernetes Deployment in DevOps

Kubernetes deployment is a critical aspect of DevOps workflows that aims to automate the process of deploying and scaling containerized applications.

Kubernetes provides a robust and scalable framework for container orchestration, and its deployment features have significantly changed how applications are deployed in the cloud.

The deployment process in Kubernetes involves several steps, starting with the creation of a deployment object that defines the desired state of the application. The next step involves the creation of a service object that exposes the application’s pods to the network.

Pod scaling is then handled by a ReplicaSet, which the Deployment creates and manages automatically and which maintains a specified number of identical pods in the cluster.

One of the main advantages of using Kubernetes for deployment is that it provides a high degree of automation, allowing developers to focus on writing code rather than worrying about the underlying infrastructure.

Kubernetes deployment also enables easy scaling of the application to accommodate traffic spikes and ensures fault tolerance through self-healing operations.

Below is a basic example of how you can define a Kubernetes deployment using a YAML configuration file. This example covers the creation of a deployment object, a service to expose it, and illustrates how replica sets work within Kubernetes to manage pod scaling.

This deployment will run a simple web application container, expose it on a specific port, and ensure that three replicas of the pod are running at all times for load balancing and redundancy.

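```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webapp
  template:
    metadata:
      labels:
        app: webapp
    spec:
      containers:
        - name: webapp-container
          image: nginx:latest
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  type: LoadBalancer
  selector:
    app: webapp        # routes traffic to the pods created by webapp-deployment
  ports:
    - port: 80
      targetPort: 80
```

Breakdown of the YAML: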

- Deployment: This section creates a deployment named `webapp-deployment` that manages the deployment of the web application. It specifies that 3 replicas of the pod should be maintained at all times.
- Pod Template: Inside the deployment, the pod template specifies the container to run (in this case, `nginx:latest`), along with the port the container exposes (80 in this case).
- Service: The service named `webapp-service` is of type `LoadBalancer`, which exposes the web application externally through the cloud provider’s load balancer. It routes traffic to the deployment’s pods on port 80.

Steps to apply this configuration:

- Save the file: Save the above YAML content into a file, e.g., `webapp-deployment.yaml`.
- Apply the configuration: Use `kubectl` to apply the configuration to your Kubernetes cluster:

  ```shell
  kubectl apply -f webapp-deployment.yaml
  ```

- Verify the deployment: Check the status of your deployment and ensure that the pods are running:

  ```shell
  kubectl get deployments
  kubectl get pods
  ```

- Access the application: If you’re using a cloud provider, the LoadBalancer service type will provision an external IP to access your application. Use `kubectl get services` to find the IP and access your application through a web browser.

This example illustrates a straightforward Kubernetes deployment that automates the rollout and scaling of a containerized application, embodying the core advantages of Kubernetes in DevOps workflows: its automation saves time and resources, while replica management and self-healing keep the deployed application available.

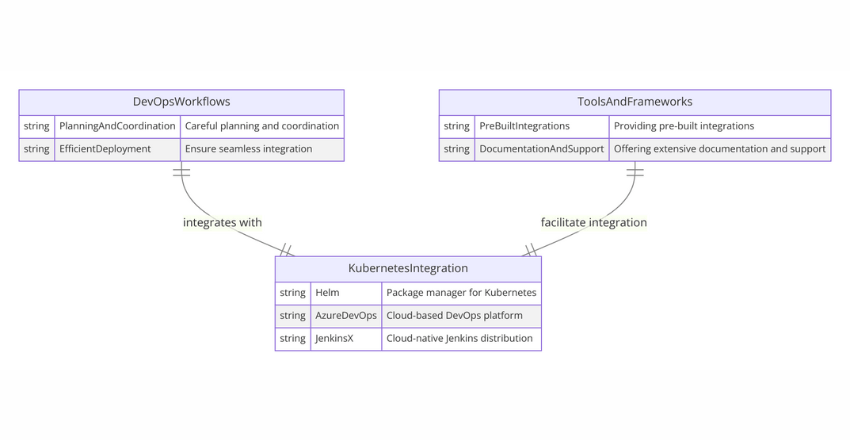

Implementing Kubernetes in DevOps Workflows

Integrating Kubernetes into DevOps workflows requires careful planning and coordination to ensure seamless integration and efficient deployment.

Tools and Frameworks for DevOps with Kubernetes

Several tools and frameworks facilitate the integration of Kubernetes into DevOps workflows. These include:

- Helm: A package manager for Kubernetes that streamlines the deployment of applications and services.

- Azure DevOps: A cloud-based DevOps platform that offers integrated support for Kubernetes and container-based deployments.

- Jenkins X: An open-source, Kubernetes-native CI/CD project that automates pipelines and the promotion of applications across environments.

These tools and frameworks simplify the integration of Kubernetes into DevOps workflows by providing pre-built integrations and offering extensive documentation and support.

Integrating Kubernetes into CI/CD Pipelines

Kubernetes can be integrated into CI/CD pipelines to enable fully automated deployments. This integration involves creating a pipeline that deploys new code changes to Kubernetes clusters, runs tests, and scales infrastructure as needed. This can be achieved using tools such as Jenkins X or by creating custom scripts leveraging Kubernetes APIs.

Integrating Kubernetes into CI/CD pipelines significantly enhances the automation, scalability, and reliability of application deployments.

Below is an example of how to create a Jenkins pipeline script (Jenkinsfile) that integrates with Kubernetes to automate the deployment of a Dockerized application.

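This example assumes you have Jenkins set up with the Docker Pipeline and Kubernetes CLI plugins installed (the latter provides the `withKubeConfig` step), that the Jenkins agent has Docker and `kubectl` available, and that your Jenkins instance has access to your Kubernetes cluster.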

Jenkinsfile Example:

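```groovy
pipeline {
    agent any

    environment {
        // Replace "your-dockerhub-user" with your registry namespace (placeholder).
        // Tagging with the build number gives every build a unique image reference;
        // re-setting an unchanged tag such as "latest" would not trigger a rollout.
        DOCKER_IMAGE = "your-dockerhub-user/myapp:${env.BUILD_NUMBER}"
        DEPLOYMENT_NAME = "myapp-deployment"
        // Hypothetical name: must match the container name in the Deployment spec
        CONTAINER_NAME = "myapp-container"
        NAMESPACE = "default"
    }

    stages {
        stage('Build Docker Image') {
            steps {
                script {
                    // Builds from the Dockerfile in the workspace root
                    docker.build(env.DOCKER_IMAGE, '.')
                }
            }
        }

        stage('Push Docker Image') {
            steps {
                script {
                    docker.withRegistry('https://index.docker.io/v1/', 'docker-credentials-id') {
                        docker.image(env.DOCKER_IMAGE).push()
                    }
                }
            }
        }

        stage('Deploy to Kubernetes') {
            steps {
                script {
                    // withKubeConfig comes from the Kubernetes CLI plugin; this serverUrl
                    // only resolves when Jenkins itself runs inside the cluster
                    withKubeConfig([credentialsId: 'kube-config', serverUrl: 'https://kubernetes.default.svc']) {
                        sh "kubectl set image deployment/${env.DEPLOYMENT_NAME} ${env.CONTAINER_NAME}=${env.DOCKER_IMAGE} -n ${env.NAMESPACE}"
                        // Wait until the rollout completes (or fails) before testing
                        sh "kubectl rollout status deployment/${env.DEPLOYMENT_NAME} -n ${env.NAMESPACE} --timeout=300s"
                    }
                }
            }
        }

        stage('Integration Test') {
            steps {
                script {
                    // Define integration tests here. This could involve running a test suite against your deployed application.
                    echo "Running integration tests..."
                }
            }
        }
    }

    post {
        always {
            echo 'Cleaning up...'
            // Implement any cleanup tasks here
        }
    }
}
```

Explanation: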

- Build Docker Image: This stage builds the Docker image for the application using the Dockerfile in the root of the project.
- Push Docker Image: After building the image, it’s pushed to a Docker registry. Ensure you replace `'docker-credentials-id'` with the ID of your Docker Hub credentials stored in Jenkins.
- Deploy to Kubernetes: Updates the Kubernetes deployment with the new Docker image. This requires a `kube-config` credential configured in Jenkins, which contains the Kubernetes cluster access configuration.
- Integration Test: A placeholder for running integration tests against the deployed application. This is crucial for ensuring the application runs correctly in the Kubernetes environment.

Setting Up:

- Jenkins Configuration: Ensure Jenkins is configured with the necessary plugins (Docker Pipeline, plus Kubernetes CLI for the `withKubeConfig` step) and has credentials set up for both Docker Hub and Kubernetes cluster access.
- Kubernetes Access: The `kubectl` command requires access to your Kubernetes cluster. This is achieved by using a kubeconfig file with the appropriate credentials, which should be securely added to Jenkins. The `kubectl` binary itself must also be installed on the Jenkins agent.
- Docker Registry Credentials: These credentials allow Jenkins to push the built image to your Docker registry. Set these up in Jenkins’ credentials store.

This example pipeline demonstrates a basic CI/CD flow, integrating Kubernetes to automate the deployment process.

It highlights the power of combining Jenkins with Kubernetes for continuous integration and delivery, showcasing how DevOps practices can be enhanced with effective tooling and automation.

To ensure successful Kubernetes integrations in DevOps workflows, teams should prioritize the following:

- Configurability: Ensure that the deployment pipeline can be customized to suit the specific needs of the project.

- Scalability: Ensure that the pipeline scales as the application grows, without performance degradation.

- Interoperability: Ensure that the pipeline can seamlessly work with other tools and frameworks used in the DevOps workflow.

By leveraging tools and frameworks and following best practices for integration, organizations can attain the full benefits of Kubernetes in DevOps workflows.

Kubernetes Orchestration in DevOps

One of the critical roles of Kubernetes in DevOps workflows is orchestration. It enables the seamless coordination of containers, services, and infrastructure, ensuring efficient resource management and optimization.

Kubernetes offers several features that make it an ideal tool for orchestration, including auto-scaling, load balancing, and self-healing capabilities. With auto-scaling, Kubernetes can automatically adjust the number of replicas of a particular deployment based on the workload.

Load balancing ensures that traffic is distributed efficiently across multiple containers, while self-healing capabilities enable Kubernetes to replace failed containers automatically.
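As a minimal sketch of how self-healing and load balancing interact, the pod below declares a liveness probe, which makes the kubelet restart the container when it stops responding, and a readiness probe, which keeps the pod out of a Service’s load-balancing pool until it can serve traffic. The pod name, label, probe path, and timings are illustrative assumptions; in practice these probes would sit in a Deployment’s pod template.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-probe-demo          # hypothetical name
  labels:
    app: probe-demo             # hypothetical label
spec:
  containers:
    - name: web
      image: nginx:alpine
      ports:
        - containerPort: 80
      # Self-healing: restart the container after 3 failed checks of "/"
      livenessProbe:
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 5
        periodSeconds: 10
        failureThreshold: 3
      # Load balancing: receive Service traffic only while "/" answers
      readinessProbe:
        httpGet:
          path: /
          port: 80
        periodSeconds: 5
```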

The orchestration capabilities of Kubernetes also extend to managing stateful applications, which require more extensive coordination and management.

Through StatefulSets and persistent volumes, Kubernetes gives stateful applications such as databases stable network identities and storage that survives pod restarts; data consistency and replication remain the responsibility of the database itself.

Furthermore, Kubernetes enables the deployment of microservices, which are modular and independent components of an application. These microservices can be managed independently, enabling teams to update and make changes to individual services without affecting the entire application.

Example

To illustrate the orchestration capabilities of Kubernetes in a DevOps context, let’s look at a code sample that demonstrates some of these features, such as auto-scaling, load balancing, and the management of stateful applications through a StatefulSet.

This example will also touch upon deploying a microservice architecture.

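```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-service-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-service
  template:
    metadata:
      labels:
        app: web-service
    spec:
      containers:
        - name: web-service-container
          image: nginx:alpine
          ports:
            - containerPort: 80
          # The HPA measures CPU utilization as a percentage of this request;
          # without a CPU request it cannot compute utilization and will not scale
          resources:
            requests:
              cpu: 100m
---
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: web-service-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-service-deployment
  minReplicas: 3
  maxReplicas: 10
  targetCPUUtilizationPercentage: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web-service
spec:
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 80
  selector:
    app: web-service
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: db-statefulset
spec:
  serviceName: "db"   # must match the headless Service below
  # Each replica is an independent PostgreSQL instance; replication between them
  # needs additional configuration. No volumeClaimTemplates are defined, so the
  # database files are not on persistent storage and do not survive pod deletion.
  replicas: 3
  selector:
    matchLabels:
      app: db
  template:
    metadata:
      labels:
        app: db
    spec:
      containers:
        - name: db-container
          image: postgres:latest
          ports:
            - containerPort: 5432
          env:
            # Demo only: in practice, load the password from a Kubernetes Secret
            - name: POSTGRES_PASSWORD
              value: "yourpassword"
---
# Headless Service (clusterIP: None) giving each StatefulSet pod a stable DNS name
apiVersion: v1
kind: Service
metadata:
  name: db
spec:
  clusterIP: None
  selector:
    app: db
  ports:
    - port: 5432
```

Breakdown of the YAML: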

- Deployment: Defines a deployment for a web service with 3 replicas of an Nginx container, demonstrating the deployment of a stateless application component.
- HorizontalPodAutoscaler (HPA): Automatically scales the web service between 3 and 10 pods, targeting an average CPU utilization of 80% of each pod’s CPU request, showcasing auto-scaling.
- Service (LoadBalancer): Exposes the web service externally, providing load balancing across the pods in the deployment.
- StatefulSet: Manages the deployment of a stateful application, in this case a PostgreSQL database, giving each pod a stable name (`db-statefulset-0`, `db-statefulset-1`, `db-statefulset-2`) and ensuring ordered deployment, scaling, and management. This is critical for applications that need persistent storage and a stable network identity.
- Service (for StatefulSet): A headless service (`clusterIP: None`) that controls the network domain for the StatefulSet, demonstrating how Kubernetes manages stateful applications.

Applying the Configuration:

To apply this configuration to your Kubernetes cluster, save the YAML to a file (e.g., `k8s-orchestration.yaml`) and run:

```shell
kubectl apply -f k8s-orchestration.yaml
```

This setup showcases Kubernetes’ orchestration capabilities, including managing both stateless and stateful applications, auto-scaling based on workload, and providing a reliable load-balanced service for accessing the application.

Through these mechanisms, Kubernetes facilitates a highly efficient, resilient, and scalable DevOps workflow, enabling the seamless deployment and management of complex containerized applications.

Overall, Kubernetes orchestration capabilities provide organizations with the ability to manage complex containerized applications effectively. It streamlines the DevOps workflow, ensuring that the deployment process is efficient, reliable, and scalable.

Overcoming Challenges with Kubernetes in DevOps

Integrating Kubernetes into DevOps workflows can bring significant benefits to software development, but this process is not without its challenges.

Managing Scalability

One of the primary benefits of using Kubernetes is its ability to scale applications efficiently, but this also presents a challenge. As the number of containers, services, and nodes increases, managing and orchestrating them becomes more complex.

To overcome scalability challenges, make full use of Kubernetes’ own scaling features, such as the Horizontal Pod Autoscaler for pods and a cluster autoscaler for nodes, so that applications can absorb traffic spikes without compromising performance. These mechanisms work well only when workloads declare realistic resource requests and the team keeps track of cluster capacity.

Ensuring Security

As with any technology, security is a crucial consideration when deploying Kubernetes in a DevOps workflow. Security challenges can arise due to factors such as misconfigured pods, unauthorized access, or insecure network connections.

To mitigate these risks, it is essential to build security into your DevOps processes from the start. Implementing secure container registries, using network policies to isolate workloads, and enforcing access control are some of the key strategies for ensuring the security of your Kubernetes clusters.
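As a minimal sketch of isolating workloads with a network policy, the manifest below reuses the labels from the orchestration example (`app: db` and `app: web-service`) so that only web-service pods in the same namespace can open connections to the database pods, and only on port 5432. The policy name is hypothetical, and the policy only takes effect if the cluster’s network plugin enforces NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-allow-web-only        # hypothetical name
spec:
  # Select the database pods from the StatefulSet example
  podSelector:
    matchLabels:
      app: db
  policyTypes:
    - Ingress
  ingress:
    # Once selected, the db pods accept only the traffic allowed here;
    # all other inbound connections are denied
    - from:
        - podSelector:
            matchLabels:
              app: web-service
      ports:
        - protocol: TCP
          port: 5432
```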

Optimizing Performance

While Kubernetes provides an efficient way to manage and deploy applications, achieving optimal performance can be a challenge. Common issues include slow response times caused by network latency or resource contention, made harder to diagnose by limited application monitoring.

To optimize performance, it is essential to have a robust monitoring and optimization strategy in place. This can include implementing performance metrics, identifying bottlenecks, and using autoscaling features to ensure that resources are allocated efficiently.
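Resource contention and CPU-based autoscaling both hinge on the resource requests and limits each container declares. The pod below sketches the idea; the name and the specific CPU and memory values are illustrative assumptions that you would tune from your own monitoring data.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-resources-demo      # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:alpine
      resources:
        # Requests: capacity the scheduler reserves on a node, and the baseline
        # that CPU-utilization autoscaling targets are measured against
        requests:
          cpu: 250m
          memory: 128Mi
        # Limits: CPU use above this is throttled; exceeding the memory limit
        # gets the container OOM-killed
        limits:
          cpu: 500m
          memory: 256Mi
```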

Continuous Improvement

Finally, continuous improvement is a critical consideration when deploying Kubernetes in DevOps workflows. As technology evolves, new challenges will arise, and it is crucial to stay up-to-date with the latest tools, frameworks, and best practices.

By prioritizing continuous improvement and staying abreast of industry trends, you can ensure that your Kubernetes deployment remains efficient, secure, and optimized for performance, enabling you to deliver high-quality applications consistently.

Final Thoughts

Integrating Kubernetes into DevOps workflows brings several benefits that enable organizations to streamline their application development and deployment processes. With Kubernetes, teams can achieve greater efficiency, scalability, and reliability.

By automating the deployment and management of containers and services, Kubernetes frees up time for developers and operations teams to focus on creating value for the business.

However, it is important to note that organizations may face challenges when integrating Kubernetes into their DevOps workflows. These can range from scalability and security to performance optimization. By leveraging best practices and implementing proactive monitoring and optimization strategies, organizations can overcome these challenges and reap the benefits of Kubernetes.

Ultimately, it is clear that Kubernetes has become a crucial tool for DevOps teams looking to accelerate product delivery, enhance collaboration, and drive innovation.

As the use of Kubernetes continues to grow, it is essential for organizations to stay up-to-date on the latest trends, tools, and best practices to maximize its potential.


FAQ

1. How do I deploy an application to Kubernetes in a DevOps pipeline?

FAQ: How can I automate the deployment of a new version of my application to Kubernetes as part of my CI/CD pipeline?

Answer: Automating application deployment to Kubernetes within a DevOps pipeline typically involves using Continuous Integration (CI) tools like Jenkins, GitLab CI, or GitHub Actions. The following is an example of a GitHub Actions workflow that deploys an application to Kubernetes.

```yaml
# .github/workflows/deploy-to-kubernetes.yml
name: Deploy to Kubernetes

on:
  push:
    branches:
      - main

jobs:
  build-and-deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up Kubectl
        uses: azure/setup-kubectl@v3

      - name: Set up Docker
        uses: docker/setup-buildx-action@v3

      - name: Login to DockerHub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}

      - name: Build and Push Docker image
        run: |
          docker build . -t yourdockerhubusername/yourapp:${{ github.sha }}
          docker push yourdockerhubusername/yourapp:${{ github.sha }}

      # Gives kubectl access to the cluster; assumes a kubeconfig stored in a
      # repository secret named KUBE_CONFIG (hypothetical name)
      - name: Set Kubernetes context
        uses: azure/k8s-set-context@v3
        with:
          method: kubeconfig
          kubeconfig: ${{ secrets.KUBE_CONFIG }}

      - name: Deploy to Kubernetes
        uses: azure/k8s-deploy@v4
        with:
          namespace: yournamespace
          manifests: |
            ./k8s
          images: |
            yourdockerhubusername/yourapp:${{ github.sha }}
```

This YAML defines a workflow that triggers on pushes to the main branch, builds a Docker image from the application, pushes it to Docker Hub, and deploys it to a Kubernetes cluster using predefined manifests. The `images` input tells the deploy action to substitute the freshly tagged image into those manifests, so they should reference `yourdockerhubusername/yourapp`.

2. How do I manage Kubernetes secrets in a DevOps workflow?

FAQ: How can I securely manage secrets for my Kubernetes applications in a continuous deployment workflow?

Answer: Kubernetes secrets should be managed securely and not stored or hard-coded in your application’s codebase. Tools like HashiCorp Vault, AWS Secrets Manager, or Kubernetes External Secrets can be used. Below is an example of how you might use Kubernetes External Secrets to manage secrets. Note that the Kubernetes External Secrets project has since been deprecated and archived; its maintainers point users to the External Secrets Operator, which provides similar functionality with a different resource schema.

First, you need to deploy the External Secrets Kubernetes operator in your cluster:

```shell
kubectl apply -f https://raw.githubusercontent.com/external-secrets/kubernetes-external-secrets/master/manifests/kubernetes-external-secrets.yaml
```

Then, you define an ExternalSecret resource that references the external secret storage (e.g., AWS Secrets Manager):

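```yaml
# external-secret.yaml
apiVersion: 'kubernetes-client.io/v1'
kind: ExternalSecret
metadata:
  name: my-external-secret
spec:
  backendType: secretsManager
  data:
    - key: /dev/ops/my-application-secrets   # secret name in AWS Secrets Manager
      name: api-key                          # key in the generated Kubernetes Secret
      property: API_KEY                      # JSON property read from the AWS secret
```

With this resource in place, the controller fetches the `API_KEY` property of the AWS secret `/dev/ops/my-application-secrets` and keeps it synced into a regular Kubernetes Secret named `my-external-secret` under the key `api-key`. Your deployments then consume that Secret like any other, so sensitive data is managed centrally and kept out of version control.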
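Because Kubernetes External Secrets is no longer maintained, here is a hedged sketch of the same mapping using its successor, the External Secrets Operator (installed separately, for example from its Helm chart), followed by a pod that reads the synced value. The region, store name, service account, pod name, and image are hypothetical; check the `apiVersion` against the operator release you install.

```yaml
# SecretStore: tells the operator how to reach AWS Secrets Manager
apiVersion: external-secrets.io/v1beta1
kind: SecretStore
metadata:
  name: aws-secrets-manager            # hypothetical name
spec:
  provider:
    aws:
      service: SecretsManager
      region: us-east-1                # assumption: use your region
      auth:
        jwt:
          serviceAccountRef:
            name: external-secrets-sa  # hypothetical SA with IAM access to the secret
---
# ExternalSecret: the same key/property mapping in the operator's schema
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: my-external-secret
spec:
  refreshInterval: 1h                  # re-sync from AWS every hour
  secretStoreRef:
    name: aws-secrets-manager
    kind: SecretStore
  target:
    name: my-external-secret           # Kubernetes Secret the operator creates
  data:
    - secretKey: api-key               # key inside the Kubernetes Secret
      remoteRef:
        key: /dev/ops/my-application-secrets
        property: API_KEY
---
# A pod consuming the synced value as an environment variable
apiVersion: v1
kind: Pod
metadata:
  name: api-client                     # hypothetical name
spec:
  containers:
    - name: app
      image: nginx:alpine              # placeholder image
      env:
        - name: API_KEY
          valueFrom:
            secretKeyRef:
              name: my-external-secret
              key: api-key
```

The pod never sees the AWS credentials; it only references the Kubernetes Secret, so switching secret backends later does not require changing the workload.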

3. How do I configure autoscaling for my Kubernetes deployments?

FAQ: How can I set up autoscaling for my Kubernetes applications to ensure they handle load efficiently?

Answer: Kubernetes Horizontal Pod Autoscaler (HPA) can be used to automatically scale the number of pods in a deployment based on observed CPU utilization or other selected metrics. Below is an example of how to configure HPA for a deployment.

First, ensure metrics-server is deployed in your cluster. Then, define an HPA resource:

```yaml
# hpa.yaml
apiVersion: autoscaling/v2   # autoscaling/v2beta2 was removed in Kubernetes 1.26
kind: HorizontalPodAutoscaler
metadata:
  name: my-application-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-application
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80
```

This HPA configuration automatically adjusts the number of `my-application` pods (between 1 and 10) to keep the average CPU utilization across all pods near 80%. Utilization is measured relative to each container’s CPU request, so the target Deployment must declare CPU requests for the HPA to act.
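For intuition, the Kubernetes documentation describes the HPA’s core calculation as scaling the current replica count by the ratio of the observed metric to its target. As a worked example with illustrative numbers, suppose `my-application` runs 4 pods averaging 120% CPU utilization against the 80% target:

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
$$

$$
\left\lceil 4 \times \frac{120\%}{80\%} \right\rceil = \lceil 6 \rceil = 6
$$

The result is then clamped to the `minReplicas` to `maxReplicas` range (1 to 10 here), and no scaling happens while the ratio stays within a tolerance of 0.1 (the default) around 1.0. Utilization can exceed 100% because it is measured against the CPU request, not the limit.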

4. How do I monitor my Kubernetes applications in a DevOps context?

FAQ: What’s a good approach to monitor Kubernetes applications to ensure high availability and performance?

Answer: Prometheus, coupled with Grafana, is widely used for monitoring Kubernetes applications. Here’s how you can set up Prometheus monitoring:

Deploy Prometheus using Helm:

```shell
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm install my-prometheus prometheus-community/prometheus
```

Then, configure Prometheus to scrape metrics from your applications and Kubernetes nodes. You can visualize these metrics using Grafana, which can also be deployed using Helm:

```shell
helm repo add grafana https://grafana.github.io/helm-charts
helm install my-grafana grafana/grafana
```

You would then configure Grafana to use Prometheus as a data source and build dashboards for your application metrics.
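For the scraping step, the default configuration shipped with the `prometheus-community/prometheus` chart includes a job that discovers pods annotated with `prometheus.io/scrape: "true"`, so one common approach is to annotate your pod template. The sketch below assumes those default scrape jobs are left enabled and that the application (hypothetical name, placeholder image) exposes metrics at `/metrics` on port 8080.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-application                 # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-application
  template:
    metadata:
      labels:
        app: my-application
      annotations:
        # Read by the chart's default pod-discovery scrape job
        prometheus.io/scrape: "true"
        prometheus.io/port: "8080"
        prometheus.io/path: /metrics
    spec:
      containers:
        - name: my-application
          image: your-registry/my-application:1.0.0   # placeholder image
          ports:
            - containerPort: 8080
```

If you use the kube-prometheus-stack chart instead, scraping is usually configured with ServiceMonitor or PodMonitor resources rather than annotations.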

5. How do I manage Kubernetes configurations across multiple environments?

FAQ: How can I efficiently manage and promote Kubernetes configurations across different environments (e.g., development, staging, production) in my DevOps pipeline?

Answer: Helm, a package manager for Kubernetes, can be used to manage configurations across different environments through the use of charts and values files. Here’s an example of deploying an application with environment-specific configurations using Helm:

```shell
helm upgrade --install my-application ./charts/my-application --values ./charts/my-application/values-production.yaml
```

In this command, `values-production.yaml` contains production-specific configuration values that override the defaults in the chart’s `values.yaml`. This approach lets you maintain a single chart (one set of templates) for all environments, with the differences captured in per-environment values files.
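A sketch of how this layering can look, assuming a chart whose templates read `replicaCount`, `image`, and `resources` (the keys that `helm create` scaffolds); all values are placeholders. The defaults live in the chart’s `values.yaml`:

```yaml
# charts/my-application/values.yaml: defaults shared by every environment
replicaCount: 1
image:
  repository: your-registry/my-application   # placeholder
  tag: "1.0.0"
resources:
  requests:
    cpu: 100m
    memory: 128Mi
```

The production file then carries only the overrides:

```yaml
# charts/my-application/values-production.yaml: production overrides only
replicaCount: 3
resources:
  requests:
    cpu: 500m
    memory: 512Mi
```

Helm merges these key by key, so `image` keeps its default while `replicaCount` and the resource requests are overridden. When several `--values` files are passed, later files take precedence, which makes it easy to add a `values-staging.yaml` alongside.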

These FAQs and code samples provide a starting point for integrating Kubernetes into your DevOps practices, covering deployment, secret management, autoscaling, monitoring, and configuration management across environments.

James is an esteemed technical author specializing in Operations, DevOps, and computer security. With a master’s degree in Computer Science from Caltech, he possesses a solid educational foundation that fuels his extensive knowledge and expertise. Residing in Austin, Texas, James thrives in the vibrant tech community, utilizing his cozy home office to craft informative and insightful content. His passion for travel takes him to Mexico, a favorite destination where he finds inspiration amidst captivating beauty and rich culture. Accompanying James on his adventures is his faithful companion, Guber, who brings joy and a welcome break from the writing process on long walks.

With a keen eye for detail and a commitment to staying at the forefront of industry trends, James continually expands his knowledge in Operations, DevOps, and security. Through his comprehensive technical publications, he empowers professionals with practical guidance and strategies, equipping them to navigate the complex world of software development and security. James’s academic background, passion for travel, and loyal companionship make him a trusted authority, inspiring confidence in the ever-evolving realm of technology.